Let’s assume you have some sensitive data that you need to protect by encryption. It might be credit card numbers (the usual example), social security numbers, or pretty much anything you consider sensitive. It does not matter whether the encryption is mandated by a standard or you just decided to encrypt the sensitive stuff. In both cases you need to do the encryption right and actually protect the information. Unfortunately, full-disk encryption and pgcrypto are not a good fit for multiple reasons, and application-level encryption reduces the database to “dumb” storage. Let’s look at an alternative approach – offloading the encryption to a separate trusted component, implemented as a custom data type.

Note: A couple of weeks ago at pgconf.eu 2018, I presented a lightning talk introducing a PoC of an alternative approach to encrypting data in a database. I have been asked repeatedly about various details since then, so let me just explain it in this blog post.

FDE and pgcrypto

In the PostgreSQL world, people will typically recommend two solutions to this problem – full-disk encryption and pgcrypto. Unfortunately, neither of them really works for this use case 🙁

Full-disk encryption (FDE) is great. It’s transparent to the database (and application), so there are no implementation changes needed. The overhead is very low, particularly when your CPU supports AES-NI etc. The problem is that it only really protects against someone stealing the disk. It does not protect against OS-level attacks (rogue sysadmin, someone gaining remote access to the box or backups, …). Nor does it protect against database-level attacks (think SQL injection). And most importantly, it’s trivial to leak the plaintext data into the server log, various monitoring systems etc. Not great.

pgcrypto addresses some of these issues, as the encryption happens in the database. But it means the database has to know the keys, and those are likely passed as part of SQL queries, so the issue with leaking data into server logs and monitoring systems is still there. Actually, it’s worse, because this time we’re leaking the encryption keys, not just the plaintext data.

Application-level encryption

So neither full-disk encryption nor pgcrypto is a viable solution to the problem at hand. The inherent issue with both solutions is that the database sees plaintext data (on input), and so can leak it into various output channels. In the case of pgcrypto the database actually sees the keys, and leaking those is even deadlier.

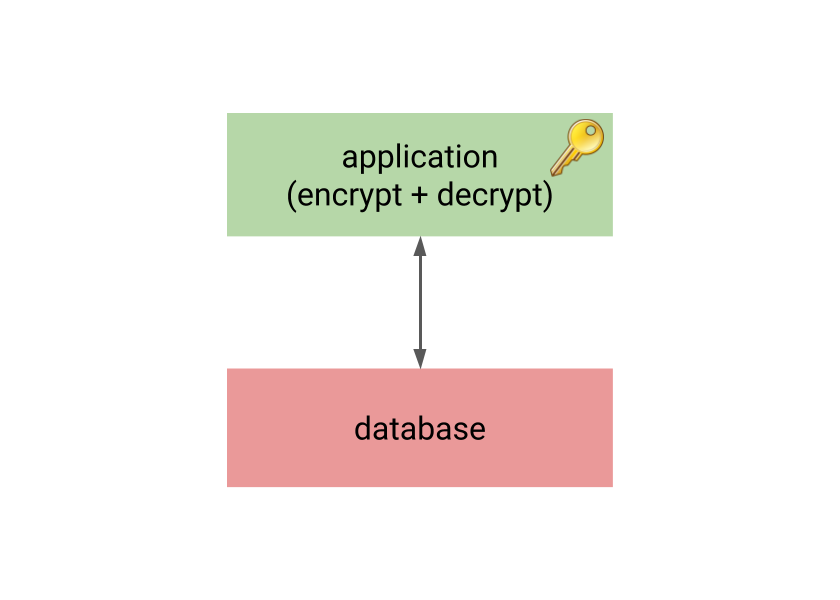

This is why many practical systems use application-level encryption – all the encryption/decryption happens in the application, and the database only sees encrypted data.

The unfortunate consequence is that the database acts as “dumb” storage, as it can’t do anything useful with the encrypted data. It can’t compare the plaintext values (it can’t even determine whether two plaintext values are equal, because each encryption uses a fresh nonce), etc. That means it’s impossible to build indexes on the encrypted data, do aggregation, or anything else that we expect from a decent relational database.
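To make the nonce problem concrete, here is a minimal Python sketch. The `toy_encrypt` and `toy_decrypt` helpers are hypothetical stand-ins built from the standard library purely for illustration (real code would use a vetted authenticated cipher, e.g. from libsodium). The only point is that a fresh random nonce makes two encryptions of the same value look unrelated to anyone without the key.

```python
import hashlib
import os

NONCE_LEN = 16


def toy_encrypt(key: bytes, plaintext: bytes) -> bytes:
    """Toy randomized encryption: fresh nonce + XOR with a derived keystream.

    Illustration only, not real cryptography.
    """
    nonce = os.urandom(NONCE_LEN)
    stream = hashlib.shake_256(key + nonce).digest(len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def toy_decrypt(key: bytes, ciphertext: bytes) -> bytes:
    nonce, body = ciphertext[:NONCE_LEN], ciphertext[NONCE_LEN:]
    stream = hashlib.shake_256(key + nonce).digest(len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


key = os.urandom(32)
card = b"4111111111111111"

a = toy_encrypt(key, card)
b = toy_encrypt(key, card)

# What the database sees: two different byte strings for the same card.
ciphertexts_equal = (a == b)
# What only a key holder can establish: the plaintexts are identical.
plaintexts_equal = (toy_decrypt(key, a) == toy_decrypt(key, b))
```

Without the key, the database has nothing to compare, sort or index on except the random-looking bytes.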

There are workarounds for some of these issues. E.g. you may compute a SHA-1 hash of the credit card number, build an index on it and use it for lookups, but this may weaken the protection when the plaintext has low entropy (as credit card numbers do).
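A rough Python sketch of why an unkeyed hash is risky for low-entropy values. It assumes, purely for illustration, that the attacker has obtained the stored SHA-1 digest and already knows the first six and last four digits of a 16-digit number; the function names are hypothetical. That leaves only a million candidates to try.

```python
import hashlib


def sha1_hex(card_number: str) -> str:
    return hashlib.sha1(card_number.encode("ascii")).hexdigest()


def recover_card(digest: str, known_prefix: str, known_suffix: str,
                 total_len: int = 16):
    """Enumerate the unknown middle digits until the hash matches."""
    missing = total_len - len(known_prefix) - len(known_suffix)
    if missing < 1:
        raise ValueError("nothing left to guess")
    for n in range(10 ** missing):
        candidate = known_prefix + str(n).zfill(missing) + known_suffix
        if sha1_hex(candidate) == digest:
            return candidate
    return None


# Hypothetical leaked lookup hash for a well-known test card number.
leaked = sha1_hex("4111111111111111")
recovered = recover_card(leaked, known_prefix="411111", known_suffix="1111")
```

A keyed hash (with the key kept away from the database) does not allow this kind of offline guessing, which is one reason to keep the hashing inside the same trusted boundary as the encryption keys.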

This means application-level encryption often results in a lot of the processing moving to the application, which is inefficient and error-prone. There must be a better solution …

Encryption off-loading

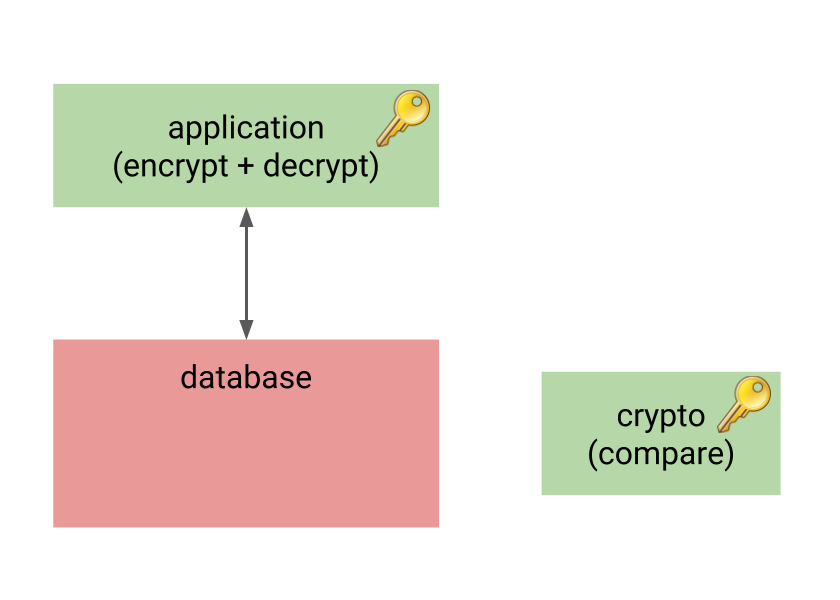

The good thing about application-level encryption is that the database does not know the plaintext or encryption keys, which makes the system safe. The problem is that the database has no way to perform interesting operations on the data, so a lot of the processing moves to the application level.

So let’s start with the application-level encryption, but let’s add another component to the system, performing the important operations on encrypted data on behalf of the database.

This component also knows the encryption keys, but it’s much smaller and simpler than the database. Its only task is to receive encrypted data from the database, and perform some predefined operation(s) on it. For example, it might receive two encrypted values, decrypt and compare them, and return -1/0/1 just like a regular comparator.
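The comparator idea can be sketched in a few lines of Python. This is not the PoC’s actual code: the cipher is a hypothetical toy built from the standard library, and everything runs in one process, whereas in the real design `compare_encrypted` would live in the separate component and the database would only ever hold the ciphertexts.

```python
import functools
import hashlib
import os

# Hypothetical key, known only to the trusted component in this sketch.
_KEY = os.urandom(32)
_NONCE_LEN = 16


def _keystream(nonce: bytes, length: int) -> bytes:
    return hashlib.shake_256(_KEY + nonce).digest(length)


def toy_encrypt(plaintext: bytes) -> bytes:
    """Toy randomized encryption (illustration only, not real cryptography)."""
    nonce = os.urandom(_NONCE_LEN)
    stream = _keystream(nonce, len(plaintext))
    return nonce + bytes(p ^ s for p, s in zip(plaintext, stream))


def toy_decrypt(ciphertext: bytes) -> bytes:
    nonce, body = ciphertext[:_NONCE_LEN], ciphertext[_NONCE_LEN:]
    stream = _keystream(nonce, len(body))
    return bytes(c ^ s for c, s in zip(body, stream))


def compare_encrypted(a: bytes, b: bytes) -> int:
    """Runs inside the component: decrypt both inputs, return -1, 0 or 1."""
    x, y = toy_decrypt(a), toy_decrypt(b)
    return (x > y) - (x < y)


# "Database" side: it stores ciphertexts and only calls the comparator.
cards = [b"5500000000000004", b"4111111111111111", b"4012888888881881"]
stored = [toy_encrypt(c) for c in cards]
ordered = sorted(stored, key=functools.cmp_to_key(compare_encrypted))
```

Sorting with nothing but a comparator is exactly what a B-tree build or an ORDER BY needs, which is why this single operation already unlocks indexing.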

This way the database still does not know anything sensitive, yet it can meaningfully perform indexing, aggregation and similar tasks. And while there’s another component with the knowledge of encryption keys, it may be much simpler and smaller compared to the whole RDBMS, with a much smaller attack surface.

The encryption component

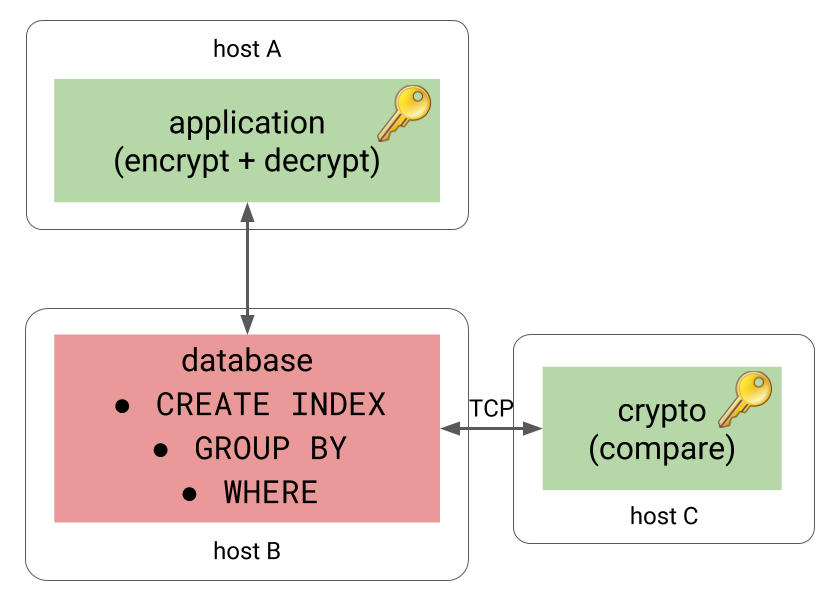

But what is a “component” in this context? It might be as simple as a small service running on a different machine, communicating over TCP.

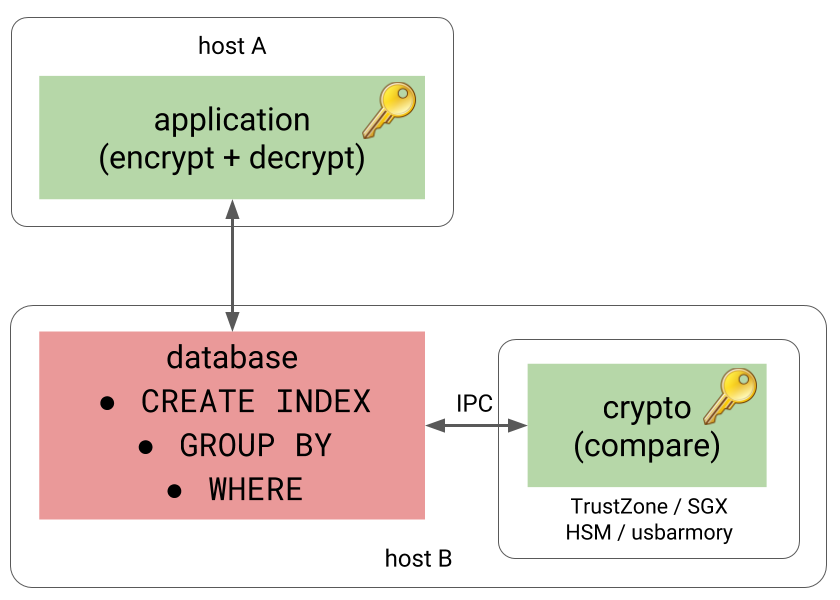

Or it might be a separate process running on the same host, providing better performance by replacing TCP with some form of IPC.

A more elaborate version of this would be running the process in a trusted execution environment, providing additional isolation. Pretty much every mainstream CPU vendor has a way to do this – Intel has SGX, AMD has SEV, ARM has TrustZone. The component might also run on an HSM or a device like usbarmory.

Each solution has a different set of pros / cons / limitations / performance characteristics, of course.

ccnumber

So, how could this be implemented on the database side, without messing with the database internals directly too much? Thankfully, PostgreSQL is extremely extensible and among other things it allows implementing custom data types. And that’s exactly what we need here. The experimental ccnumber extension implements a custom data type, offloading comparisons to the component, either over TCP or IPC (using POSIX message queues).

The encryption is done using libsodium, a popular and easy-to-use library providing all the important pieces (authenticated encryption, keyed hashing).

Performance

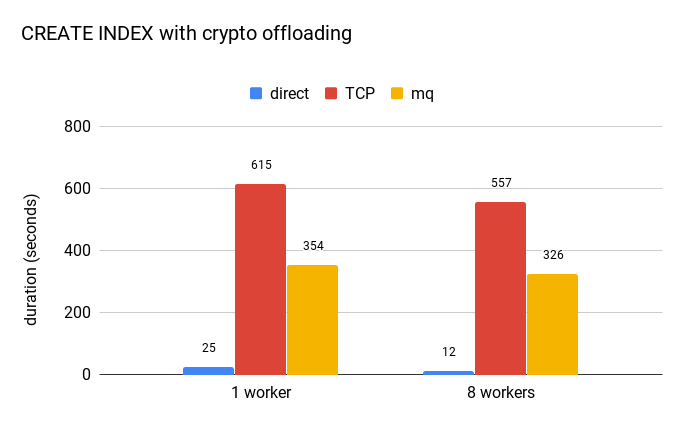

Offloading operations to a separate component is certainly slower than evaluating them directly, but how much? The extension is merely a PoC and so there’s certainly room for improvement, but a benchmark may provide at least some rough estimates. The following chart shows how long it takes to build an index on ccnumber on a table with 22M rows.

The blue bar represents an index created directly on the bytea value, representing the encrypted value. The overhead is clearly significant: creating the index is at least an order of magnitude slower (with TCP being twice as slow as POSIX message queues). Furthermore, the custom data type can’t use sorting optimizations like abbreviated keys etc. (unlike the plain bytea type).

But this does not make this approach to encryption impractical. CREATE INDEX is very intensive in terms of the number of comparisons, and you do it only very rarely in practice.

What probably matters much more is the impact on inserts and lookups – and those operations actually do very few comparisons. It’s quite rare to see an index with more than 5 or 6 levels, so you usually need very few comparisons to determine which leaf page to look at. And the other stuff (WAL logging, etc.) is not cheap either, so making the comparisons a bit more expensive won’t make a huge difference.
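A back-of-the-envelope sketch in Python shows the gap between the two workloads. The row count and the number of levels come from the text above; the 256 entries per index page and the n·log2(n) sort estimate are assumptions chosen only to get an order of magnitude, and the function names are hypothetical.

```python
import math


def index_build_comparisons(rows: int) -> float:
    """Rough n * log2(n) estimate for sorting all keys during CREATE INDEX."""
    return rows * math.log2(rows)


def lookup_comparisons(levels: int, entries_per_page: int) -> int:
    """One binary search per B-tree level on the way down to a leaf page."""
    return levels * math.ceil(math.log2(entries_per_page))


# 22M rows, as in the benchmark: on the order of 5 * 10**8 comparisons.
build = index_build_comparisons(22_000_000)

# 5 levels, assumed 256 entries per page: 5 * 8 = 40 comparisons.
lookup = lookup_comparisons(levels=5, entries_per_page=256)

ratio = build / lookup
```

Under these assumptions a single lookup needs a few dozen offloaded comparisons, while the index build needs hundreds of millions, which is why the CREATE INDEX benchmark is close to a worst case.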

Moreover, an alternative to slightly more expensive index access is sending much more data to the application, and doing the filtering there.

The other observation is that increasing the number of workers does not speed up CREATE INDEX significantly. The reason is that the parallel index build performs about 60% more comparisons compared to the non-parallel one. And as those extra comparisons happen in the serial part of the algorithm, it limits the speedup (just like Amdahl’s law says).
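A crude Amdahl-style model of this, sketched in Python. It assumes (optimistically) that the original comparisons split perfectly across workers, that the extra 60% is entirely serial and independent of the worker count, and that comparisons dominate the run time. None of this is measured; `parallel_build_speedup` is a hypothetical helper, not something taken from the extension.

```python
def parallel_build_speedup(workers: int, extra_serial: float = 0.6) -> float:
    """Estimated speedup of a parallel build over a serial one.

    Time is counted in units of "all comparisons of the serial build":
    the parallel phase costs 1 / workers, the serial phase costs
    `extra_serial` regardless of the number of workers.
    """
    if workers < 2:
        raise ValueError("the model only applies to parallel builds")
    return 1.0 / (1.0 / workers + extra_serial)


speedups = {n: round(parallel_build_speedup(n), 2) for n in (2, 4, 8, 16)}

# Upper bound as the number of workers grows: 1 / 0.6, roughly 1.67.
limit = 1.0 / 0.6
```

In this model the serial 60% alone caps the speedup below about 1.67, however many workers are added.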

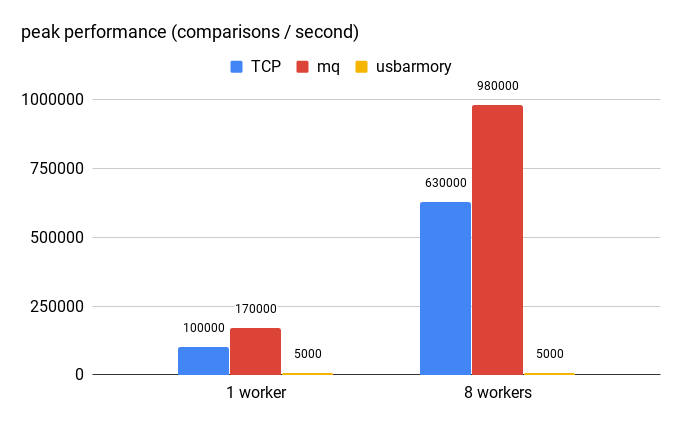

Another way to look at this is how fast the crypto component can evaluate requests from the database, illustrated by the following chart:

On this particular system (with a Xeon E5-2620 v4 CPU), the TCP-based version handles up to 100k operations per second, and the MQ-based one handles about 170k. With 8 workers (matching the CREATE INDEX test) they achieve about 630k and 1M operations per second, respectively.

For comparison, I’ve also included usbarmory, which uses a single-core NXP i.MX53 ARM® Cortex™-A8 CPU. Obviously, this CPU is much weaker compared to the Xeon, and handles only about 5000 operations per second. But it would still serve quite well as a custom HSM.

Summary

I hope this post demonstrates that the offloading approach to encryption is viable, and that it addresses the issues with FDE and pgcrypto.

That is not to say the extension is complete or ready for production use, of course. One of the main missing pieces is key management – obtaining the keys, rotating them when needed, etc. The PoC extension has the keys hard-coded, but clearly that’s not a viable solution. Admittedly, key management is a very challenging topic on its own, but it also depends on how your application already does that – it seems natural to do it the same way here. I’d welcome feedback, suggestions or ideas on how to approach this in a flexible manner.