We all realize how important it is to be able to analyze the data we gather and extract useful information from it. 2UDA is a step in that direction and aims to bring together data storage and management (PostgreSQL) with data mining and analysis (Orange).

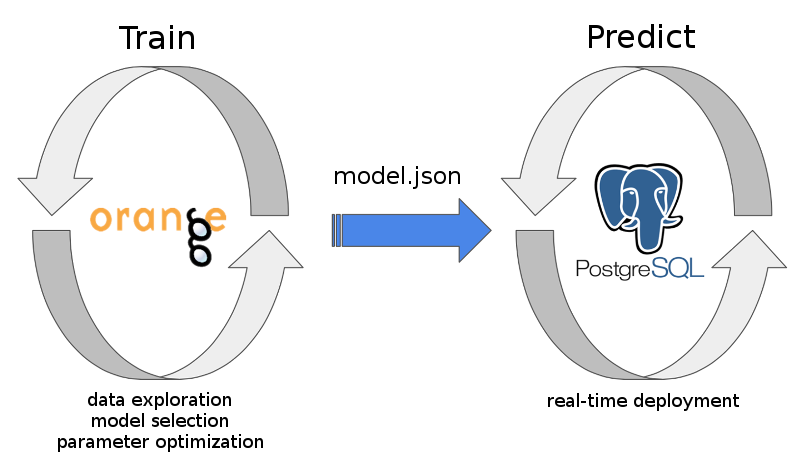

pgpredict is a project in development that aims to be the next step and bring it all full circle. Starting with data (in our case stored in a database), we first need to give experts access to it so they can analyze it with specialized tools and methods. But afterwards, when they have, for example, trained a predictive model that solves something important and beneficial for us, they need to be able to convey those results back so we can exploit them. This is precisely the problem pgpredict tries to solve: deploying predictive models directly inside the database for efficient, real-time execution.

The project started as a continuation of 2UDA, which already allows Orange to work with data stored in a PostgreSQL database. What was needed was a way to export trained predictive models, transfer them to where they are needed (e.g. the production server) and deploy them. So the project is split into two extensions: one for Orange that exports models to .json files, and one for PostgreSQL that loads and runs those models. Because the models are stored in text files, they can be tracked in a version control system. The JSON format also makes it easy to store them in the database after loading, making use of PostgreSQL's JSON capabilities.
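To make the export/load round trip concrete, here is a minimal sketch in plain Python. It is not pgpredict's actual file format or API: the field names (`type`, `features`, `coefficients`, `intercept`), both helper functions, and the coefficient values are assumptions made up for illustration. It only shows why a text-based JSON file is enough to carry a linear model from the analysis tool to the place where predictions are made.

```python
import json


def export_linear_model(features, coefficients, intercept):
    """Serialize a fitted linear model to a JSON string.

    The layout is hypothetical, not pgpredict's real schema.
    """
    if len(features) != len(coefficients):
        raise ValueError("one coefficient per feature is required")
    model = {
        "type": "linear_regression",
        "features": list(features),
        "coefficients": [float(c) for c in coefficients],
        "intercept": float(intercept),
    }
    # Sorted keys and indentation keep diffs small under version control.
    return json.dumps(model, indent=2, sort_keys=True)


def load_linear_model(text):
    """Parse the JSON once and return a reusable predict(row) function."""
    model = json.loads(text)
    weights = dict(zip(model["features"], model["coefficients"]))
    intercept = model["intercept"]

    def predict(row):
        # row maps feature names to numeric values
        return intercept + sum(w * row[name] for name, w in weights.items())

    return predict


# Made-up coefficients for the variables used in the test later in the post.
exported = export_linear_model(["age", "wage", "visits"], [0.5, 0.01, 2.0], 10.0)
predict = load_linear_model(exported)
prediction = predict({"age": 40, "wage": 3000, "visits": 5})
print(prediction)
```

Parsing happens once in `load_linear_model`, and the returned function is then reused for every row, which mirrors the idea of loading a model once and running it many times.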

Currently there is a working implementation for a limited number of predictive models, and it has not yet been thoroughly optimized. But it is already showing great promise.

To test it, I generated a table of 10M imaginary customers with some independent random variables (age, wage, visits) and an output variable (spent). Orange was then used to load the table and obtain a predictive model. Because Orange makes use of TABLESAMPLE (a PostgreSQL 9.5 feature), trying different parameters and settings works quickly, even for data much larger than in this test. The data scientist can therefore interactively try different solutions, evaluate them, and come up with a good model in the end. The final ridge regression model was then exported and loaded into the database. There it can be used in real time to predict the amount spent for new customers appearing in the database.
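As an illustration of the kind of sampling query this relies on, the sketch below builds a `TABLESAMPLE` statement as a string. The table name `customers`, the helper `sample_query`, and the 1% sample size are assumptions for the example; the exact queries Orange issues are not shown in this post. `SYSTEM` is one of the two sampling methods built into PostgreSQL 9.5 (the other is `BERNOULLI`), and `REPEATABLE` fixes the seed so that a sample can be reproduced.

```python
def sample_query(table, columns, percent, seed=None):
    """Build a SELECT that reads roughly `percent` percent of a table.

    Hypothetical helper for illustration; it only assembles the SQL text.
    """
    if not 0 < percent <= 100:
        raise ValueError("percent must be in the range (0, 100]")
    # Crude guard: accept only simple identifier-like names.
    for name in [table, *columns]:
        if not name.isidentifier():
            raise ValueError(f"unexpected identifier: {name!r}")
    query = f"SELECT {', '.join(columns)} FROM {table} TABLESAMPLE SYSTEM ({percent})"
    if seed is not None:
        # REPEATABLE makes the same sample come back for the same seed,
        # as long as the table has not changed in the meantime.
        query += f" REPEATABLE ({seed})"
    return query


query = sample_query("customers", ["age", "wage", "visits", "spent"], 1, seed=42)
print(query)
```

With a 10M-row table, a 1% sample is on the order of 100,000 rows, which is why trying out different models and settings can stay interactive.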

Benchmarking with pgbench showed that selecting an existing column for a single customer from the table took 0.086 ms, while fetching the independent variables and predicting the value of spent took only slightly longer: 0.134 ms.

Predicting the amount spent for 10^6 customers does not take 10^6 times longer (134 s), since the model is initialized the first time it is used and then reused. It actually took 13.6 s, about 10x faster than that naive estimate.
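The arithmetic behind these figures can be checked in a few lines. The three input numbers are the measurements reported above; the variable names are just for this sketch.

```python
single_prediction_ms = 0.134   # one prediction, including model initialization
rows = 10**6                   # customers scored in the batch run
batch_total_s = 13.6           # measured time for the whole batch

# Naive estimate: pay the single-prediction cost for every row.
naive_total_s = single_prediction_ms * rows / 1000

# Average cost per row once initialization is shared across the batch.
per_row_ms = batch_total_s * 1000 / rows

# How much faster the batch is than the naive estimate.
speedup = naive_total_s / batch_total_s

print(f"naive estimate: {naive_total_s:.0f} s")
print(f"per-row cost in batch: {per_row_ms:.4f} ms")
print(f"speedup: {speedup:.2f}x")
```

The average per-row cost in the batch comes out at about 0.0136 ms, roughly a tenth of the single-prediction time, which suggests that most of the 0.134 ms in the single-customer case is overhead that gets amortized when many rows are scored at once.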

These numbers were obtained for a simple model, on my laptop, with code that still has a lot of room for optimization. Expect a more rigorous evaluation soon, when we get ready to release pgpredict to the public. But even now, I think the efficiency and ease of use shown here would make it a great asset for most users looking for predictive analytics in their PostgreSQL-powered data warehouses.