Introduction

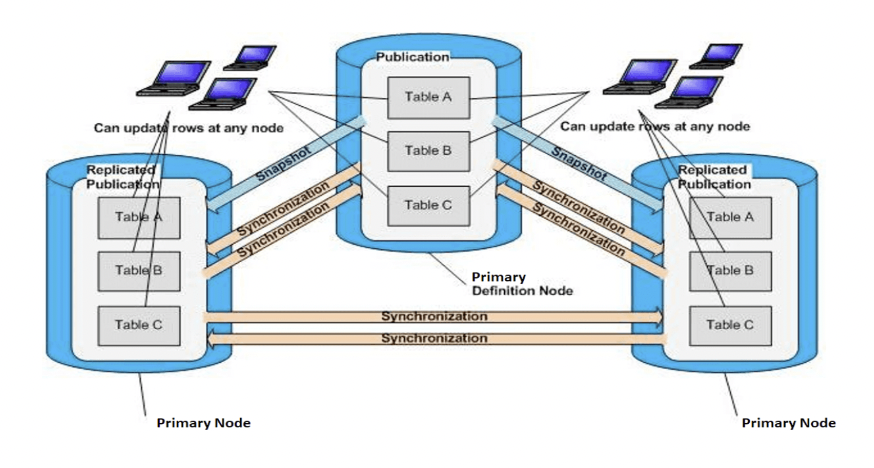

EDB provides EDB Replication Server (xDB/EPRS) 7.4, a trigger-based or WAL-based replication system with a managed process for data distribution. It can perform multi-master replication, but only between Postgres databases, and all changes pass through a central distribution node.

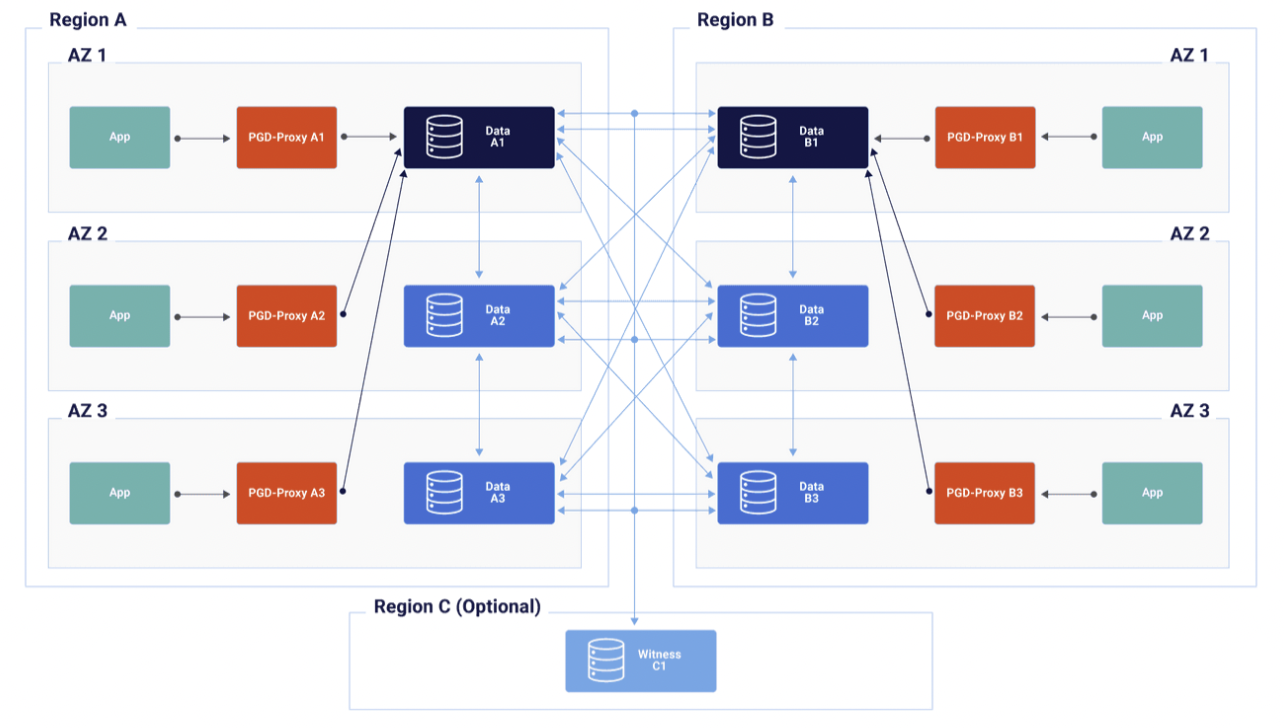

Now that Postgres Distributed (PGD) 5.0 is available, EDB has a tool that can handle multi-master replication without any external processes. PGD is a Postgres extension, available from EDB, that connects the nodes in a mesh network so that every node communicates directly with every other node. This sets it apart from a product built around a single distribution process, like EDB Replication Server.

That raises the question: how do these tools compare? We can answer this question in a few ways.

For the purposes of this blog, we’ll focus on performance: how fast is each tool at replicating data from one database node to another? We’ll examine the performance benefits you can get by adopting the latest version, PGD 5, instead of EPRS 7.4.

EDB Postgres Replication Server (EPRS)

EDB Postgres Replication Server (EPRS) replicates data between Postgres databases in single-master or multi-master mode. It can also replicate from non-Postgres databases, such as Oracle and SQL Server, to Postgres in single-master mode.

Replicating data this way lets users work with real, current data and get reliable results in more than one environment. Support for both single-master and multi-master replication gives Replication Server a broad range of use cases, including:

- Java-based replication technology for bidirectional and unidirectional replication

- Bidirectional: Postgres to Postgres

- Unidirectional: from Postgres or another DBMS to a target Postgres database, or vice versa

- Replication using WAL decoding or a trigger-based approach

More details on EDB Postgres Replication Server are available at the following link.

EDB Postgres Distributed (PGD)

EDB Postgres Distributed (PGD) provides multi-master replication and data distribution with advanced conflict management, data-loss protection, and up to 5x the throughput of native logical replication.

PGD provides loosely coupled, multi-master logical replication using a mesh topology. This means that you can write to any server, and the changes are sent directly, row by row, to all the other servers in the same PGD group. Key capabilities include:

- Logical replication of data, schema changes (DDL), sequences, and more

- Robust tooling to manage conflicts, monitor performance, and validate consistency

- Geo-fencing, allowing selective replication of data for security compliance and jurisdiction control

More details on EDB Postgres Distributed are available at the following link.

Performance comparison of Replication Server 7.4 vs Postgres Distributed 5.0

Now that we’ve gone over how each product works, let’s compare their performance under a specific workload: TPROC-C, run with HammerDB.

Objective: Performance comparison of Replication Server 7.4, in Multi-Master Replication (MMR) mode, versus Postgres Distributed 5.0.

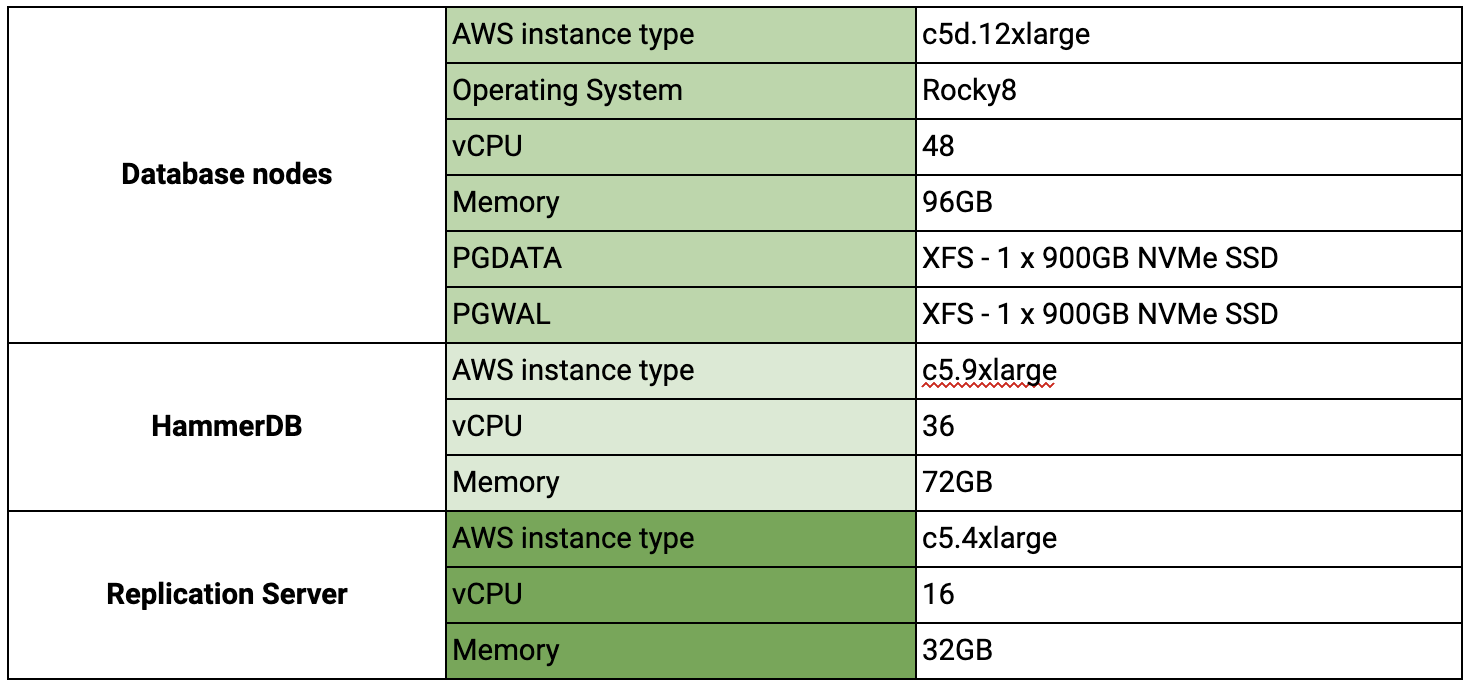

Benchmark Specifications:

- Target Architecture: 2 database nodes

- Data Replication: WAL records

- Workload Used: TPROC-C (HammerDB) with a ~200GB database

- Database Server: EDB Postgres Advanced Server (EPAS) 14

- Test duration: 14 runs of 6 minutes each (one run per virtual-user count, from 1 to 14)

Replication performance is evaluated by measuring replication catch-up time, in seconds. A database function is executed right after the OLTP workload finishes: it fetches the current LSN position of the primary node, then measures how long the standby node takes to catch up to that LSN.
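The post doesn't show the database function itself. As a rough illustration of the same idea, here is a hypothetical Python sketch: record the target LSN on the primary (in Postgres, that value can come from `pg_current_wal_lsn()`), then poll the replica's applied position until it reaches the target. The names `parse_lsn`, `measure_catchup`, and the `replica_lsn` callable are assumptions for this example; how you read the replica's position depends on the replication system in use.

```python
import time
from typing import Callable


def parse_lsn(lsn: str) -> int:
    """Convert a Postgres LSN string such as '16/B374D848' to an integer.

    The part before '/' is the high 32 bits and the part after is the
    low 32 bits, both in hexadecimal.
    """
    high, low = lsn.split("/")
    return (int(high, 16) << 32) | int(low, 16)


def measure_catchup(
    target_lsn: str,
    replica_lsn: Callable[[], str],  # hypothetical: returns the replica's applied LSN
    poll_interval: float = 0.5,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Return the seconds elapsed until replica_lsn() reaches target_lsn."""
    target = parse_lsn(target_lsn)
    start = clock()
    # Poll until the replica has applied everything up to the target LSN.
    while parse_lsn(replica_lsn()) < target:
        sleep(poll_interval)
    return clock() - start
```

Injecting `clock` and `sleep` keeps the function testable without a live database; in a real setup, `replica_lsn` would wrap a query against the standby node.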

System Characteristics

Evaluation and Findings

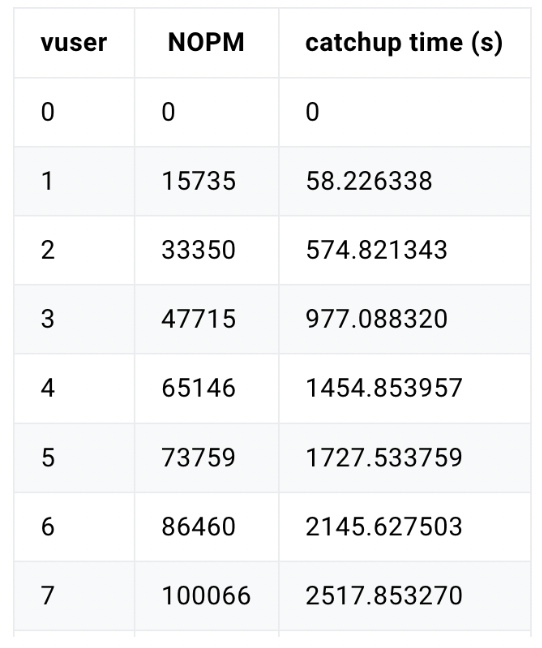

EDB Replication Server (EPRS 7.4)

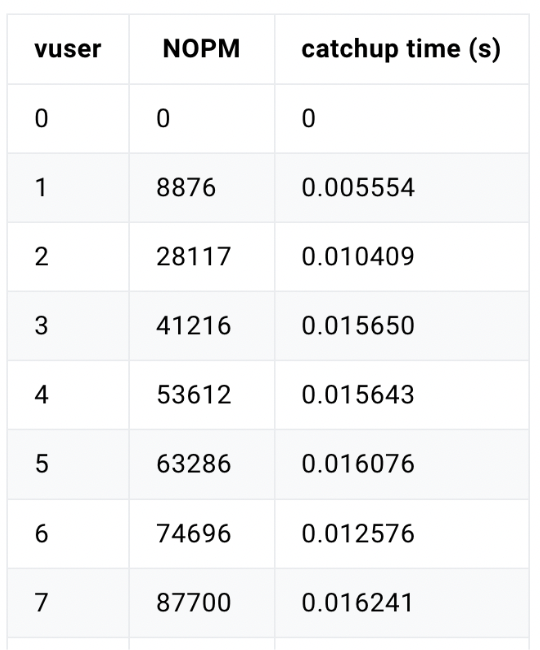

EDB Postgres Distributed (PGD 5)


The two graphs above show, for 1 to 14 HammerDB virtual users each executing the OLTP workload for 6 minutes (1-minute ramp-up, 5 minutes steady state), the following metrics:

- NOPM (New-Order transactions executed Per Minute) rate, returned by HammerDB

- Replication catch-up time, in seconds

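The break point marks where replication latency begins in each system (EPRS 7.4 and PGD 5): beyond that number of virtual users, the standby can no longer keep up during the run, and catch-up time becomes non-zero.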
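One way to locate the break point from the collected metrics is sketched below in Python, under the assumption that a run "keeps up" when its catch-up time is at or below a small tolerance. The sketch scans runs in order of virtual users and reports the first one that exceeds it. The function name and the `tolerance_s` parameter are hypothetical, and any numbers used with it here are illustrative, not the benchmark's data.

```python
from typing import Optional, Sequence, Tuple


def find_break_point(
    runs: Sequence[Tuple[int, float]], tolerance_s: float = 0.0
) -> Optional[int]:
    """Return the first virtual-user count whose catch-up time exceeds tolerance_s.

    runs: (virtual_users, catchup_seconds) pairs, one per benchmark run.
    Returns None if replication kept up in every run.
    """
    # Sort by virtual-user count so the lowest failing load is found first.
    for users, catchup in sorted(runs):
        if catchup > tolerance_s:
            return users
    return None
```

For example, `find_break_point([(1, 0.0), (2, 0.0), (3, 4.5)])` returns `3`, because the third run is the first one that leaves a replication backlog.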

Sustainable TPM

Sustainable NOPM = (TC * TR) / (TR + CT)

Where:

- TC: Total NOPM

- TR: Total run time

- CT: Catch-up time (in the same unit as TR)

Sustainable throughput: the throughput a system can sustain indefinitely without accumulating replication lag.

This method of calculating sustainable TPM (transactions per minute) is explained in detail in the following blog post.
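The sustainable NOPM formula translates directly into code. This Python sketch (the function names are hypothetical) computes sustainable NOPM for each run and keeps the highest value, which is one plausible way to reduce a sweep of virtual-user counts to a single figure per product. The post doesn't say whether TR is the full 6-minute run or the 5-minute steady state, so the run time is left as a parameter.

```python
from typing import Sequence, Tuple


def sustainable_nopm(total_nopm: float, run_time: float, catchup_time: float) -> float:
    """Sustainable NOPM = (TC * TR) / (TR + CT).

    run_time (TR) and catchup_time (CT) must use the same unit, e.g. seconds.
    """
    if run_time <= 0 or catchup_time < 0:
        raise ValueError("run_time must be positive and catchup_time non-negative")
    return total_nopm * run_time / (run_time + catchup_time)


def best_sustainable(
    runs: Sequence[Tuple[int, float, float]], run_time: float
) -> Tuple[int, float]:
    """Return (virtual_users, sustainable NOPM) for the best-scoring run.

    runs: (virtual_users, nopm, catchup_seconds) triples, one per run.
    """
    scored = [(users, sustainable_nopm(nopm, run_time, ct)) for users, nopm, ct in runs]
    return max(scored, key=lambda pair: pair[1])
```

With illustrative numbers, a run at 120,000 NOPM that needs 100 s to catch up after a 300 s run scores 120,000 × 300 / 400 = 90,000 sustainable NOPM. When catch-up time is zero, sustainable NOPM equals the measured NOPM.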

Conclusion

This benchmark shows that the sustainable NOPM rate of EDB Postgres Distributed 5.0 is 126K, which is 15.75 times EDB Postgres Replication Server 7.4’s rate of 8,000 NOPM (126,000 / 8,000 = 15.75). This makes EDB Postgres Distributed a more suitable high-availability product for Postgres multi-master replication.

The key contributing factors to this performance are:

- In multi-master replication (MMR) mode, EDB Postgres Distributed applies changes with 16 writers, whereas EDB Postgres Replication Server can use only one database connection (worker) to apply changes.

- EDB Postgres Replication Server applies changes by issuing SQL statements (INSERT, UPDATE, and DELETE), while EDB Postgres Distributed uses a binary protocol. The binary approach is faster because Postgres does not have to parse any SQL statements before writing the changes.
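To see why the number of apply workers matters, here is a deliberately simplified Python model. It is not how PGD or EPRS actually schedule work: it treats each replicated transaction as an independent job with a fixed apply cost and hands it to whichever writer becomes free first. `apply_makespan` and its inputs are hypothetical.

```python
import heapq
from typing import Sequence


def apply_makespan(txn_costs: Sequence[float], writers: int) -> float:
    """Estimate the time to apply txn_costs using `writers` parallel apply workers.

    Toy model: transactions are independent, and each goes to the writer that
    becomes idle first. Real systems must also respect commit order and
    row-level dependencies, which this model ignores.
    """
    if writers < 1:
        raise ValueError("writers must be >= 1")
    finish_times = [0.0] * writers  # time at which each writer becomes idle
    heapq.heapify(finish_times)
    for cost in txn_costs:
        earliest = heapq.heappop(finish_times)  # least-loaded writer
        heapq.heappush(finish_times, earliest + cost)
    return max(finish_times)
```

Under this idealized assumption, 16 equal-cost transactions take 16 time units with one writer but 1 unit with 16 writers. Real speedups are typically smaller, because ordering constraints and conflicts limit how much work can run in parallel.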

Want to learn more about EDB Postgres Distributed? Read more on our website! There’s a free trial of PGD 5.0 coming soon; sign up to be notified here.