In this article we are going to see how database servers work together to allow the second server to take over quickly if the primary server fails(high availability), and to allow multiple servers to serve the same data for SELECTs(horizontal read scalability).

Since both functionalities are not available in one product, we need to rely on external tools to provide this functionality and integrate them. EDB Postgres Failover Manager (EFM) is high-availability tool to monitor the health of Postgres Streaming Replication clusters to verify database failures quickly and automatically promote a standby node as primary without any data loss and with minimal service interruption(connection rollback). PgPool-II(pgpool) provides connection pooling and load balancing for horizontal scalability of SELECT queries on multiple standbys.

We need EDB Failover Manager (EFM) and PgPool-II functionalities to achieve high-availability and horizontal read scalability.

IMO, it would have been difficult to integrate EFM & PgPool, if the latest version of EFM 3.2 has not introduced Load Balancer Hooks(Thanks to EFM team). About EFM 3.2, it supports multiple ways to connect applications with the new master database after a failover or promotion. With latest version, two new script hooks introduced to execute before and after a database event of switchover or failover or standby node failure. These EFM new hooks can be used to update the PgPool and other load balancer configuration. All we need, write a simple script with set of commands to manipulate load balancer configuration on the database status and set the script in EFM load balancer hooks(script.load.balancer.attach / script.load.balancer.detach) in cluster properties file. When EFM takes action on any database event, these scripts hooks will be executed automatically and run load balancer configuration commands set in script to update the database node status. By this way, integration is transparent, well automated and with NO manual intervention in case of any database events.

Watch a short video demonstrating the integration with few testing scenarios.

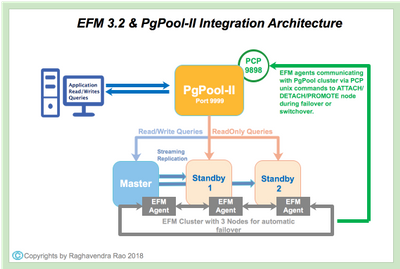

Architecture:

How EFM & PgPool Integration works ?

EFM Load Balancer script hooks(script.load.balancer.attach / script.load.balancer.detach) are called by “EFM” user for every database event (down/up/promotion) before or after the event accordingly. A simple script should be created with PgPool PCP unix commands in it to update the PgPool configuration. When EFM performs Switchover/Failover, a node role will be changed and “pcp_promote_node” command can be used to update PgPool-II cluster for new master similarly for any node down or up “pcp_attach_node” & “pcp_detach_node” command can be used to add/remove node from PgPool Cluster. Remember, since EFM script hooks call PCP unix commands we MUST configure password-less authentication between database and PgPool Node to avoid interruption of Switchover/Failover.

Note: When pgpool pcp_promote_node command exeucted to update a New Master information in an event of Switchover/Failover performed by EFM, the connections which are established to old Master or Standby nodes will be disconnected and reconnected to new master after promotion.

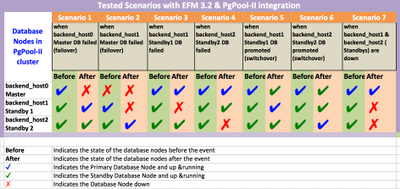

Tested Scenarios

Below are the list of scenarios tested in 1 Master and 2 Standby nodes architecture.

Note: Above scenarios are tested with EFM 3.2/PgPool-II 3.7/EPAS 10.x versions, behavior may vary if implemented with other versions. This article do not encourage to implement the architecture in production without thorough testing.

Let's proceed with setup.

Pre-Requisites

Servers:

- 3 Database Nodes (1 Master & 2 Asynchronous Standbys)

- 1 PgPool-II Node

Components:

- EDB Postgres Advanced Server 10.x (EPAS 10.x)

- EDB Postgres Failover Manager 3.2 (EFM 3.2)

- PgPool 3.7 (any version of 3.x supported)

- Operating System of all the servers - CentOS 7.x version(64 Bit)

Installation

In this section, we will cover the installation of all components required on each server as per the architecture. We are going to use RPM method of installation for all the components. Refer to the documentation link tagged with each component to complete the installation.

On PgPool Node:

On 3 Database Nodes:

- Install EDB Postgres Advanced Server 10.x (EPAS)

- Install EDB Failover Manager 3.2 (EFM)

- Install PgPool Binaries (on database nodes we need ONLY pgpool binaries to run PCP unix commands. No pgpool configuration or services should be started)

Configuration

In this section, we are going to cover ONLY very important configuration required for EFM & PgPool integration. Streaming Replication & EFM configuration are not covered in details for the clarity reason of the article.

- Configure Asynchronous Streaming Replication between 1 Master & 2 Standby nodes.

- Configure EFM Cluster by adding Master & 2 Async Standby nodes.

While configuring EFM, do below parameter changes to <clustername>.properties file on each database node.

db.user=enterprisedb

db.password.encrypted=<encrypted password>

db.port=5444

db.database=edb

db.service.owner=enterprisedb

db.service.name=edb-as-10.service

db.recovery.conf.dir=/var/lib/edb/as10/data

user.email=<email address>

bind.address=MASTER_IP:7800 //Masked the IP address

admin.port=7809

is.witness=false

auto.allow.hosts=true

stable.nodes.file=true

auto.failover=true

auto.reconfigure=true

promotable=true

script.load.balancer.attach=/path/to/script/location/efm_loadbalancer_attach.sh %h

script.load.balancer.detach=/path/to/script/location/efm_loadbalancer_detach.sh %h

efm.loglevel=FINENote: Change Node IP address as per Master/Standby 1/Standby 2 and rest all remain same on all the nodes.

While configuring PgPool, do below changes to parameters in $PGPOOL_PATH/etc/pgpoo.conf file.

backend_hostname0 = 'MASTER_IP'

backend_port0 = 5444

backend_weight0 = 1

backend_data_directory0 = '/var/lib/edb/as10/data'

backend_flag0 = 'ALLOW_TO_FAILOVER'

backend_hostname1 = 'STANDBY_IP_1'

backend_port1 = 5444

backend_weight1 = 1

backend_data_directory1 = '/var/lib/edb/as10/data'

backend_flag1 = 'ALLOW_TO_FAILOVER'

backend_hostname2 = 'STANDBY_IP_2'

backend_port2 = 5444

backend_weight2 = 1

backend_data_directory2 = '/var/lib/edb/as10/data'

backend_flag2 = 'ALLOW_TO_FAILOVER'

enable_pool_hba = on

load_balance_mode = on

sr_check_user = 'enterprisedb'

sr_check_password = '<enterprisedb user password>'

sr_check_database = 'edb'

health_check_user = 'enterprisedb'

health_check_password = '<enterprisedb user password>'

health_check_database = 'edb'

delay_threshold = <adjust as per the Master/Standby delay>

fail_over_on_backend_error = off

search_primary_node_timeout = 3Note: Above parameters are mostly relevant to EFM & PgPool integration. It may vary if you want to add PgPOOL HA(Watchdog).

- Configure PCP commands on each Database Node

- Connect to the database node

- Switch as “EFM” user

#su - efm

-bash-4.2$ pwd

/var/efm/- Create PCP password file with pgpool node hostname, pcp user, pcp port and pcp password.

echo "PGPOOL_HOSTIP:PCPPORT:PCPUSER:PCPPASSWORD" > ~/pcppass

Eg:-

-bash-4.2$ more pcppass

172.16.23.23:9898:enterprisedb:edb- Change the "pcppass" file permission by disallowing world or group

-bash-4.2$ chmod 0600 ~/pcppass - Test the PCP command to connect to pgpool PCP port on 9898 by setting terminal environment variable PCPPASSFILE. Remember, PCP command should executed successfully without prompting for the password.

Note: Above PCP configuration steps should be followed on each database node. All nodes should pass the password-less authentication for a successful PgPool integration.$ export PCPPASSFILE=/var/efm/pcppass $ /usr/edb/pgpool3.6/bin/pcp_node_count -h <pgpool_node_ip> -U enterprisedb -p 9898 -w - Configure EFM load balancer scripts hooks on each Database Nodes.

- Connect to the database node

- Switch as “EFM” user

#su - efm -bash-4.2$ pwd /var/efm/ - Create directory as “EFM” user to store load balancer script hooks and it use also used for script logging.

-

-bash-4.2$ mkdir efm-scripts -bash-4.2$ cd efm-scripts - Create two scripts with the content shared in this article below or clone from GitHub repository to the “efm-scripts” location.

- efm_loadbalancer_attach.sh

- efm_loadbalancer_detach.sh

- Edit the script and adjust the EPAS, PGPOOL & PORTS as per your environment.

Start the Services

After installation/configuration, start the services of each components involved in architecture.

- Start EPAS service on master & 2 standby nodes (systemctl start edb-as-10)

- Start EFM service on master & 2 standby nodes (systemctl start efm-32)

- After starting you check the efm cluster status using “efm cluster-status <clustername>”

- Start PgPool service on PgPool Node (systemctl start pgpool.service)

Two EFM Load Balancer Scripts:

Two user-friendly scripts "efm_loadbalancer_attach.sh” and “efm_loadbalancer_detach.sh” written in BASH and they are wrapped with PCP unix commands which are executed by EFM Load balancer attach/detach hooks as “efm” user. Refer to GitHub Repository for sample configuration files and scripts. Please feel free to clone & use the scripts, if there's a scope of improvement or correction then don't hestitate to raise issue in the repository.

Note: Including script content in this article may risk of missing the real context of the article by readers, hence those details are moved to GitHub Repository Page.

After completing all the above steps, let's run few command and see the outputs from PgPool Load balancer for an EFM database event.

Testing Outputs

Consistent State Before Testing:

After configuring Asynchronous Streaming Replication, EDB Failover Manager Cluster & PgPool-II load balancing, below output shows the nodes status in PgPool.

[root@frontend-server ~]# /bin/psql -p 9999 -U enterprisedb edb -c 'show pool_nodes;'

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+------+--------+-----------+---------+------------+-------------------+-------------------

0 |MASTER_IP | 5444 | up | 0.333333 |primary | 2 | true | 0

1 | STANDBY1_IP | 5444 | up | 0.333333 | standby | 10 | false | 0

2 | STANDBY2_IP | 5444 | up | 0.333333 | standby | 6 | false | 0

(3 rows)

PgPool Nodes Status after Switchover

In EFM, switchover can be performed using “efm promote <cluster name> -quiet -switchover” command. One of the priority standby will take the role of primary when performed switchover and old primary will be attached as standby to new primary. During this EFM operation, load balancer hooks will connect to pgpool cluster via pcp command to manipulate the nodes roles.

[root@frontend-server ~]# /bin/psql -p 9999 -U enterprisedb edb -c 'show pool_nodes;'

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+------+--------+-----------+---------+------------+-------------------+-------------------

0 | STANDBY1_IP| 5444 | up | 0.333333 |standby | 2 | false | 0

1 |MASTER_IP | 5444 | up | 0.333333 | primary | 10 | true | 0

2 | STANDBY2_IP |5444 | up | 0.333333 | standby | 6 | false | 0

(3 rows)

Note: Above result after the Switchover from the consistent state.

PgPool Nodes Status after Failover

In EFM, manual failover can be performed using “efm promote <cluster name>” command. One of the priority standby will take the role of primary when performed failover and old primary will be detached. During this EFM operation, load balancer hooks will connect to pgpool cluster via pcp command to manipulate the nodes roles.

[root@frontend-server ~]# /bin/psql -p 9999 -U enterprisedb edb -c 'show pool_nodes;'

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+------+--------+-----------+---------+------------+-------------------+-------------------

0 |MASTER_IP | 5444 | down | 0.333333 |standby | 2 | false | 0

1 | STANDBY1_IP | 5444 | up | 0.333333 | primary | 10 | true | 0

2 | STANDBY2_IP | 5444 | up | 0.333333 | standby | 6 | false | 0

(3 rows)

Note: Above result after the manual EFM Failover from the consistent state.

That's all. Hope it helps.