This post outlines the steps for creating and configuring the EDB Postgres platform in your AWS account using the PostgreSQL deployment scripts available on GitHub.

With these deployment scripts, you can set up the PostgreSQL database of your choice (PG or EDB Postgres). The scripts also deploy and configure the tools that handle high availability and automatic failover, and they set up monitoring for the PostgreSQL cluster.

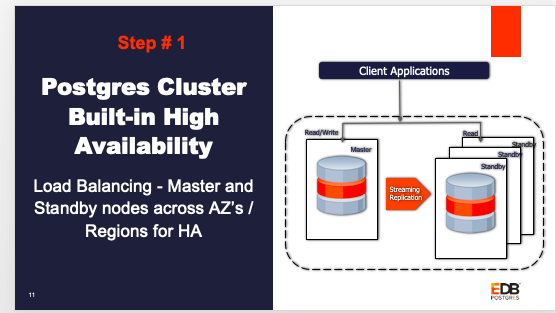

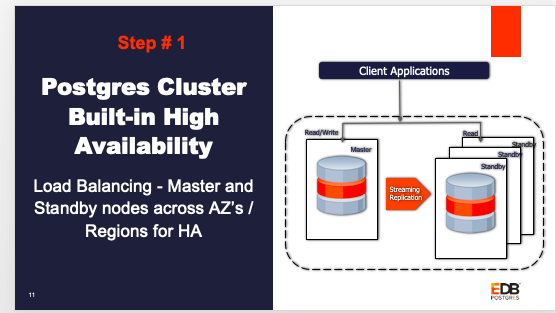

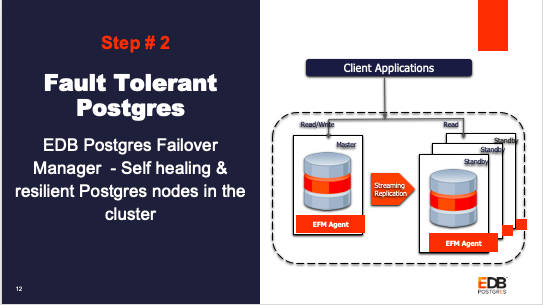

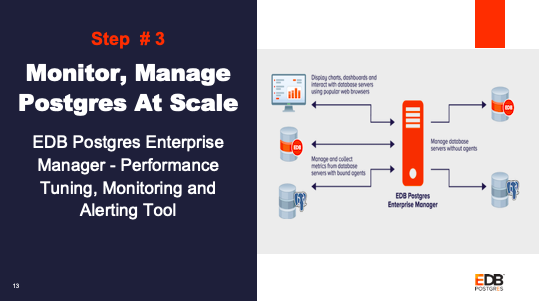

Let’s see what the architecture is going to look like:

Step 1: Create a Postgres cluster of your choice (3 nodes: 1 Master, 2 Standby). Replication is set up between the master and standby nodes. You can choose the replication type, either synchronous or asynchronous.

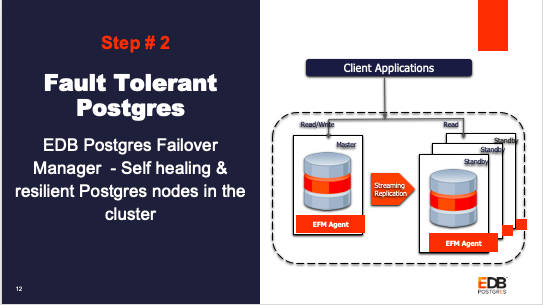

Step 2: Enable High Availability & Resiliency using EDB Failover Manager (EFM) for the Postgres cluster just provisioned. EFM agents are set up for each database node and will be responsible for failover management.

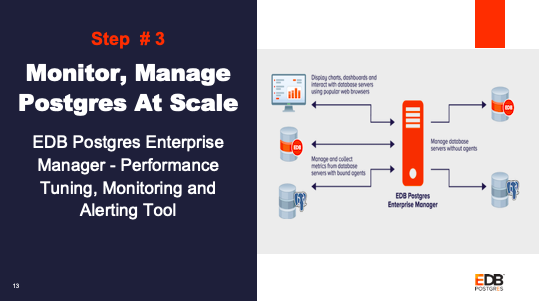

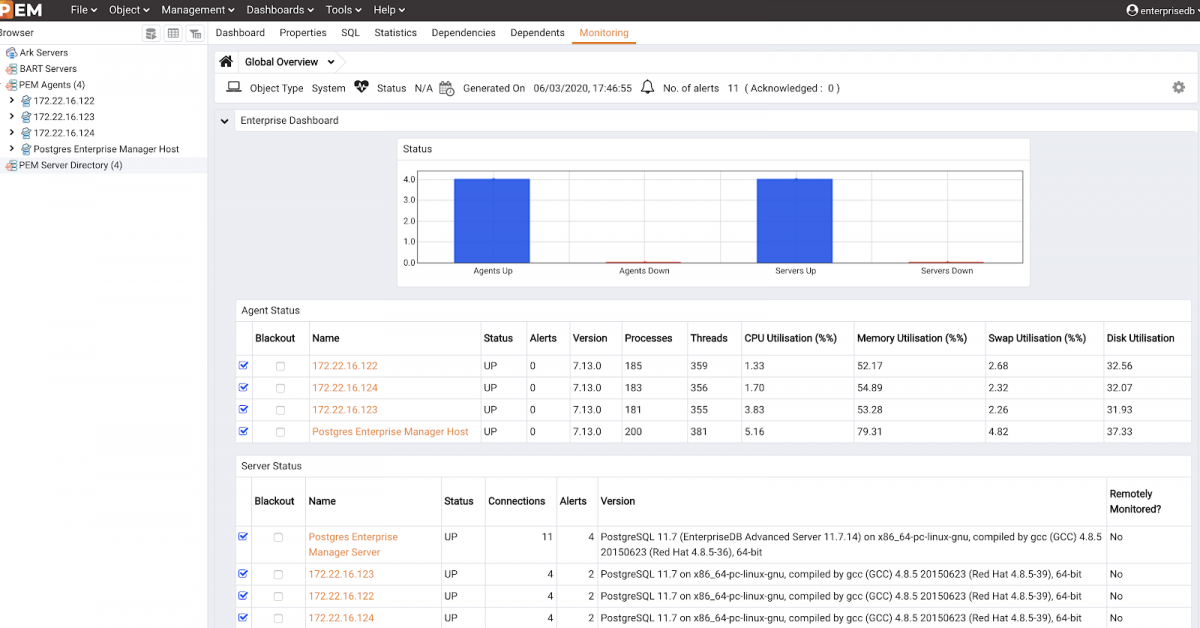

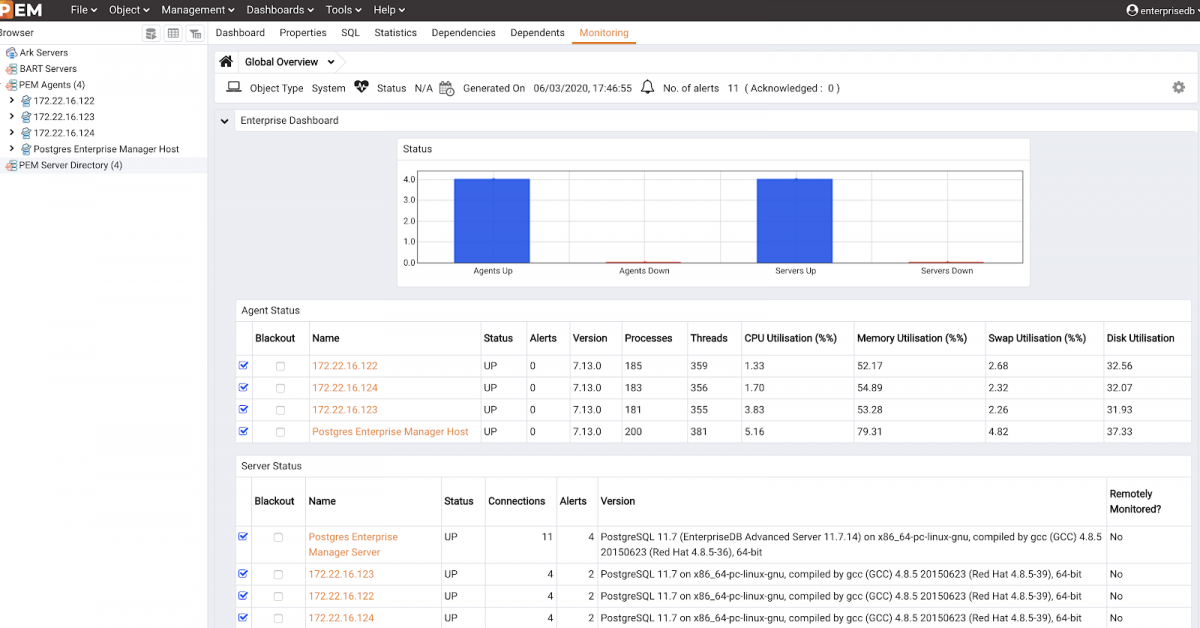

Step 3: Enable Monitoring, Alerting & Tuning using EDB Postgres Enterprise Manager (PEM). The PEM Server is set up for monitoring the database. PEM agents monitor the database nodes and send the data to the PEM server.

Clone the GitHub Repository

Clone or download the Postgres deployment scripts from the EnterpriseDB GitHub repository.

Once you clone the files, the directory structure will look like this:

laptop154-ma-us:postgres-deployments loaner$ ls -ll

total 8

drwxr-xr-x 10 loaner staff 320 Mar 2 11:25 DB_Cluster_AWS

drwxr-xr-x 10 loaner staff 320 Mar 2 09:44 DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Mar 2 11:12 EFM_Setup_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 EFM_Setup_VMWARE

drwxr-xr-x 10 loaner staff 320 Mar 2 12:17 Expand_DB_Cluster_AWS

drwxr-xr-x 7 loaner staff 224 Feb 11 12:27 Expand_DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Mar 2 11:03 PEM_Agent_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 PEM_Agent_VMWARE

drwxr-xr-x 10 loaner staff 320 Mar 2 09:51 PEM_Server_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:22 PEM_Server_VMWARE

laptop154-ma-us:postgres-deployments loaner$

We have used CentOS 7 as the base AMI for creating the instances.

Here is the list of prerequisites to get you started. You can use your laptop or any other machine to deploy the PostgreSQL platform on AWS. I did it on my MacBook.

- Terraform installed on your machine.

- Ansible installed on your machine.

- An IAM user with programmatic access.

- A VPC created in your AWS account in the region of your choice.

- A minimum of 3 subnets created in your VPC, with auto-assign public IP enabled.

- A key pair created in advance for SSH.

- A subscription to the EDB yum repository (if using EDB Postgres).

- An IAM role (optional).

- S3 bucket created for backup.
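Before going further, it can help to confirm that the command-line tools from the list above are actually on your PATH. The following is a minimal sketch, not part of the deployment scripts: the function name `missing_tools` is hypothetical, and the list of tools checked (`terraform`, `ansible-playbook`, `ssh`) is my assumption about what the scripts call.

```shell
#!/bin/sh
# Print the name of every listed tool that cannot be found on PATH.
# missing_tools is a hypothetical helper, not part of the deployment scripts.
missing_tools() {
    for tool in "$@"; do
        # command -v exits non-zero when the name cannot be resolved.
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf '%s\n' "$tool"
        fi
    done
}

# The tool list below is an assumption about what the walkthrough needs.
missing=$(missing_tools terraform ansible-playbook ssh)
if [ -n "$missing" ]; then
    # $missing is left unquoted on purpose so the names print on one line.
    echo "Missing tools:" $missing
else
    echo "All required tools found."
fi
```

If anything is reported as missing, install it (or fix your PATH) before running any of the Terraform steps.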

Step 1: Create a PostgreSQL Cluster (3 node: 1 Master, 2 Standby).

The following steps create a 3-node cluster.

Go into the DB_Cluster_AWS folder and edit the file edb_cluster_input.tf using your preferred editor. Fill in the values for the mandatory parameters listed in the file and save it.

If you need help filling in any of the parameters, check out the GitHub Wiki page, which describes each parameter in detail. The optional parameters give you the option to supply a customized value or to use the default values included in the input file.

Terraform Deploy

Before applying this input file, you need to set a few environment variables as follows:

export AWS_ACCESS_KEY_ID=Your_AWS_Access_ID

export AWS_SECRET_ACCESS_KEY=Your_AWS_Secret_Key_ID

These are the AWS credentials Terraform uses to create resources in your AWS account. They must be set before you run a Terraform config file.
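A quick way to catch a forgotten export is to check the variables before calling Terraform. This is a small sketch under my own assumptions: `require_env` is a hypothetical helper name, and it relies on `printenv`, which only sees exported variables (which is the point, since Terraform runs as a child process).

```shell
#!/bin/sh
# Return 0 only if every named environment variable is exported and non-empty.
# require_env is a hypothetical helper, not part of the deployment scripts.
require_env() {
    rc=0
    for name in "$@"; do
        # printenv fails for variables that are unset or not exported.
        value=$(printenv "$name") || value=""
        if [ -z "$value" ]; then
            echo "Missing environment variable: $name" >&2
            rc=1
        fi
    done
    return "$rc"
}

if require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; then
    echo "AWS credentials are exported."
fi
```

A variable that is set in the shell but not exported is reported as missing, which mirrors what Terraform itself would see.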

export PATH=$PATH:/path_of_terraform_binary_file_directory

This is the absolute path of the directory that contains the Terraform binary you downloaded when installing Terraform. Once you have done this, run the following command to initialize the Terraform working directory:

terraform init

laptop154-ma-us:DB_Cluster_AWS loaner$ terraform init

Initializing modules...

Initializing the backend...

Initializing provider plugins...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.aws: version = "~> 2.48"

* provider.null: version = "~> 2.1"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

laptop154-ma-us:DB_Cluster_AWS loaner$

laptop154-ma-us:DB_Cluster_AWS loaner$

terraform apply

This will prompt you for the region where the resources are going to be created. Supply the region name (for example, us-east-1), and make sure it is the same region where the VPC you specified in the Terraform input config file was created.

You will then be prompted to confirm creating the resources. Type yes and hit the Enter key (only the lowercase word yes is accepted). This starts the process of creating and configuring the DB cluster.

Once it completes successfully, Terraform prints the cluster details, including the public and private IP addresses of each node.
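If you would rather not answer the region prompt interactively, Terraform's standard `-var` option can supply the value on the command line. The sketch below only prints the command so you can review it first. The helper name `apply_command` is hypothetical, the variable name `region_name` is taken from the prompt shown in the apply output, and the shape check on the region code is a rough heuristic of mine, not a list of valid AWS regions.

```shell
#!/bin/sh
# Print (rather than run) a terraform apply command that passes the region
# up front, so Terraform does not prompt for var.region_name.
# apply_command is a hypothetical helper, not part of the deployment scripts.
apply_command() {
    region=$1
    # Rough shape check for codes such as us-east-1 (heuristic only).
    case "$region" in
        [a-z][a-z]-*-[0-9]) ;;
        *) echo "Unexpected region code: $region" >&2; return 1 ;;
    esac
    printf 'terraform apply -var region_name=%s\n' "$region"
}

apply_command us-east-1
```

Terraform still asks for the yes confirmation when you run the printed command, so you keep the chance to read the plan before anything is created.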

laptop154-ma-us:DB_Cluster_AWS loaner$ terraform apply

var.region_name

AWS Region Code like us-east-1,us-west-1

Enter a value: us-east-1

module.edb-db-cluster.data.aws_ami.centos_ami: Refreshing state...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.edb-db-cluster.aws_eip.master-ip will be created

+ resource "aws_eip" "master-ip" {

+ allocation_id = (known after apply)

+ association_id = (known after apply)

+ domain = (known after apply)

+ id = (known after apply)

+ instance = (known after apply)

+ network_interface = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ public_ipv4_pool = (known after apply)

+ vpc = true

}

# module.edb-db-cluster.aws_instance.EDB_DB_Cluster[0] will be created

+ resource "aws_instance" "EDB_DB_Cluster" {

+ ami = "ami-0c3b960f8440c7d71"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ iam_instance_profile = "role-for-terraform"

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = "pgdeploy"

+ network_interface_id = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = "subnet-e0621bef"

+ tags = {

+ "Created_By" = "Terraform"

+ "Name" = "epas12-master"

}

+ tenancy = (known after apply)

+ volume_tags = (known after apply)

+ vpc_security_group_ids = (known after apply)

+ ebs_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ snapshot_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

+ ephemeral_block_device {

+ device_name = (known after apply)

+ no_device = (known after apply)

+ virtual_name = (known after apply)

}

+ network_interface {

+ delete_on_termination = (known after apply)

+ device_index = (known after apply)

+ network_interface_id = (known after apply)

}

+ root_block_device {

+ delete_on_termination = true

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 8

+ volume_type = "gp2"

}

}

# module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] will be created

+ resource "aws_instance" "EDB_DB_Cluster" {

+ ami = "ami-0c3b960f8440c7d71"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ iam_instance_profile = "role-for-terraform"

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = "pgdeploy"

+ network_interface_id = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = "subnet-cffa7da8"

+ tags = {

+ "Created_By" = "Terraform"

+ "Name" = "epas12-slave1"

}

+ tenancy = (known after apply)

+ volume_tags = (known after apply)

+ vpc_security_group_ids = (known after apply)

+ ebs_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ snapshot_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

+ ephemeral_block_device {

+ device_name = (known after apply)

+ no_device = (known after apply)

+ virtual_name = (known after apply)

}

+ network_interface {

+ delete_on_termination = (known after apply)

+ device_index = (known after apply)

+ network_interface_id = (known after apply)

}

+ root_block_device {

+ delete_on_termination = true

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 8

+ volume_type = "gp2"

}

}

# module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] will be created

+ resource "aws_instance" "EDB_DB_Cluster" {

+ ami = "ami-0c3b960f8440c7d71"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ iam_instance_profile = "role-for-terraform"

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = "pgdeploy"

+ network_interface_id = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = "subnet-e0621bef"

+ tags = {

+ "Created_By" = "Terraform"

+ "Name" = "epas12-slave2"

}

+ tenancy = (known after apply)

+ volume_tags = (known after apply)

+ vpc_security_group_ids = (known after apply)

+ ebs_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ snapshot_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

+ ephemeral_block_device {

+ device_name = (known after apply)

+ no_device = (known after apply)

+ virtual_name = (known after apply)

}

+ network_interface {

+ delete_on_termination = (known after apply)

+ device_index = (known after apply)

+ network_interface_id = (known after apply)

}

+ root_block_device {

+ delete_on_termination = true

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 8

+ volume_type = "gp2"

}

}

# module.edb-db-cluster.aws_security_group.edb_sg[0] will be created

+ resource "aws_security_group" "edb_sg" {

+ arn = (known after apply)

+ description = "Rule for edb-terraform-resource"

+ egress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 0

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "-1"

+ security_groups = []

+ self = false

+ to_port = 0

},

]

+ id = (known after apply)

+ ingress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 22

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 22

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 5432

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 5432

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 5444

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 5444

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 7800

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 7900

},

]

+ name = "edb_security_groupforsr"

+ owner_id = (known after apply)

+ revoke_rules_on_delete = false

+ vpc_id = "vpc-de8457a4"

}

# module.edb-db-cluster.null_resource.configuremaster will be created

+ resource "null_resource" "configuremaster" {

+ id = (known after apply)

+ triggers = (known after apply)

}

# module.edb-db-cluster.null_resource.configureslave1 will be created

+ resource "null_resource" "configureslave1" {

+ id = (known after apply)

+ triggers = (known after apply)

}

# module.edb-db-cluster.null_resource.configureslave2 will be created

+ resource "null_resource" "configureslave2" {

+ id = (known after apply)

+ triggers = (known after apply)

}

# module.edb-db-cluster.null_resource.removehostfile will be created

+ resource "null_resource" "removehostfile" {

+ id = (known after apply)

}

Plan: 9 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.edb-db-cluster.aws_security_group.edb_sg[0]: Creating...

module.edb-db-cluster.aws_security_group.edb_sg[0]: Creation complete after 2s [id=sg-068c4cbad3d5ea7f2]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2]: Creating...

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[0]: Creating...

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1]: Creating...

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2]: Still creating... [10s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[0]: Still creating... [10s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1]: Still creating... [10s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[0]: Still creating... [20s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2]: Still creating... [20s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1]: Still creating... [20s elapsed]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1]: Provisioning with 'local-exec'...

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] (local-exec): changed: [35.153.144.7]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] (local-exec): TASK [Configure epass-12] ******************************************************

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] (local-exec): skipping: [35.153.144.7]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] (local-exec): TASK [Start DB Service] ********************************************************

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): [WARNING]: Consider using 'become', 'become_method', and 'become_user' rather

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): than running sudo

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): changed: [3.216.125.55]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): TASK [Configure epass-12] ******************************************************

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): skipping: [3.216.125.55]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): TASK [Start DB Service] ********************************************************

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): changed: [3.216.125.55]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): PLAY RECAP *********************************************************************

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1] (local-exec): 3.216.125.55 : ok=12 changed=11 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[1]: Creation complete after 4m20s [id=i-0307cbe0293366e71]

module.edb-db-cluster.aws_instance.EDB_DB_Cluster[2] (local-exec): changed: [35.153.144.7]

module.edb-db-cluster.null_resource.configuremaster (local-exec): TASK [Create replication role] *************************************************

module.edb-db-cluster.null_resource.configuremaster (local-exec): skipping: [3.233.45.172]

module.edb-db-cluster.null_resource.configuremaster (local-exec): TASK [Add entry in pg_hba file] ************************************************

module.edb-db-cluster.null_resource.configuremaster (local-exec): changed: [3.233.45.172]

Apply complete! Resources: 9 added, 0 changed, 0 destroyed.

Outputs:

DBENGINE = epas12

Key-Pair = pgdeploy

Key-Pair-Path = /Users/loaner/Downloads/pgdeploy.pem

Master-PrivateIP = 172.31.65.116

Master-PublicIP = 3.233.45.172

Region = us-east-1

S3BUCKET = kanchan/pgtemplate

Slave1-PrivateIP = 172.31.3.196

Slave1-PublicIP = 3.216.125.55

Slave2-PrivateIP = 172.31.75.72

Slave2-PublicIP = 35.153.144.7

Access the PostgreSQL cluster

As Terraform outputs the Master and Standby node IPs, you can connect to the Master database using your preferred client, pgAdmin or psql, and do a data load. Or, you can ssh into the Master instance and connect to the database. Remember to use the same credentials you used in the input file when Terraform provisioned your database node.
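If you want to script the connection instead of copying the IP by hand, the "Name = value" lines that Terraform prints can be parsed with awk. This is a sketch under assumptions: `output_value` is a hypothetical helper, and the sample text is copied from the Outputs section of this run. On a live deployment the input would come from running `terraform output` in the DB_Cluster_AWS folder; newer Terraform versions may wrap string values in quotes, which this sketch does not strip.

```shell
#!/bin/sh
# Read "Name = value" lines on stdin and print the value for one name.
# output_value is a hypothetical helper, not part of the deployment scripts.
output_value() {
    awk -v key="$1" -F ' = ' '$1 == key { print $2 }'
}

# Sample lines copied from the Outputs section of the apply run.
outputs='Master-PrivateIP = 172.31.65.116
Master-PublicIP = 3.233.45.172
Slave1-PublicIP = 3.216.125.55'

master_ip=$(printf '%s\n' "$outputs" | output_value Master-PublicIP)
# Print the ssh command instead of running it.
echo "ssh -i pgdeploy.pem centos@$master_ip"
```

The key file name and the centos login user match the SSH session used later in this post.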

Confirm that streaming replication is set up and working as expected by running a SELECT query on one of your standby nodes, or by checking the replication status on the master with the following query:

laptop154-ma-us:Downloads loaner$ ssh -i pgdeploy.pem centos@3.233.45.172

Last login: Wed Mar 4 13:45:56 2020 from c-73-253-67-129.hsd1.ma.comcast.net

[centos@ip-172-31-65-116 ~]$ sudo -i

[root@ip-172-31-65-116 ~]#

[root@ip-172-31-65-116 ~]#

[root@ip-172-31-65-116 ~]# psql postgres postgres

psql (12.2.3)

Type "help" for help.

postgres=#

postgres=# select version();

version

---------------------------------------------------------------------------------------------------------------------

--------------------------

PostgreSQL 12.2 (EnterpriseDB Advanced Server 12.2.3) on x86_64-pc-linux-gnu, compiled by gcc (GCC) 4.8.5 20150623 (

Red Hat 4.8.5-36), 64-bit

(1 row)

postgres=# create table test1( i int);

CREATE TABLE

postgres=#

postgres=# insert into test1 values( generate_series(1,2000));

INSERT 0 2000

postgres=#

postgres=#

postgres=# select * from pg_stat_replication;

pid | usesysid | usename | application_name | client_addr | client_hostname | client_port | backend_st

art | backend_xmin | state | sent_lsn | write_lsn | flush_lsn | replay_lsn | write_lag | flus

h_lag | replay_lag | sync_priority | sync_state | reply_time

------+----------+------------+------------------+--------------+-----------------+-------------+--------------------

--------------+--------------+-----------+-----------+-----------+-----------+------------+-----------------+--------

---------+-----------------+---------------+------------+----------------------------------

4045 | 16384 | edbrepuser | slave1 | 172.31.3.196 | | 47052 | 02-MAR-20 14:43:55.

950239 +00:00 | | streaming | 0/90385D0 | 0/90385D0 | 0/90385D0 | 0/90385D0 | 00:00:00.001205 | 00:00:0

0.001918 | 00:00:00.002007 | 1 | quorum | 04-MAR-20 15:43:46.760557 +00:00

4054 | 16384 | edbrepuser | slave2 | 172.31.75.72 | | 52630 | 02-MAR-20 14:43:56.

800764 +00:00 | | streaming | 0/90385D0 | 0/90385D0 | 0/90385D0 | 0/90385D0 | 00:00:00.000501 | 00:00:0

0.001123 | 00:00:00.0012 | 1 | quorum | 04-MAR-20 15:43:46.757691 +00:00

(2 rows)

postgres=# \q

[root@ip-172-31-65-116 ~]#

[root@ip-172-31-65-116 ~]#
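The wide pg_stat_replication output above is hard to read at a glance. One option is to select only a few columns in psql's unaligned, tuples-only mode (`psql -At -c "select application_name, state, sync_state from pg_stat_replication" postgres postgres`) and count the standbys that are streaming. The sketch below shows only the counting step on sample rows that mirror the values in the output above; `streaming_count` is a hypothetical helper name.

```shell
#!/bin/sh
# Count rows whose state column is "streaming" in pipe-delimited input of
# the form application_name|state|sync_state (psql -At style).
# streaming_count is a hypothetical helper, not part of the deployment scripts.
streaming_count() {
    awk -F '|' '$2 == "streaming" { n++ } END { print n + 0 }'
}

# Sample rows mirroring the two standbys shown in the query output.
sample='slave1|streaming|quorum
slave2|streaming|quorum'

printf '%s\n' "$sample" | streaming_count
```

For the 3-node cluster built here, a healthy result is 2, one row per standby.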

Step 2: EFM agents are set up for each database node and will be responsible for failover management.

In this section, we will walk you through the steps of setting up EFM on the database cluster that was created in Step 1.

Go into the EFM_Setup_AWS folder and edit the file edb_efm_cluster_input.tf using your preferred editor. Fill in the values for the mandatory parameters listed in the file and save it.

If you need help filling in any of the parameters, check out the GitHub Wiki page, which describes each parameter in detail. The optional parameters give you the option to supply a customized value or to use the default values included in the input file.

laptop154-ma-us:postgres-deployments loaner$ cd EFM_Setup_AWS/

laptop154-ma-us:EFM_Setup_AWS loaner$

laptop154-ma-us:EFM_Setup_AWS loaner$ ls -ll

total 80

drwxr-xr-x 7 loaner staff 224 Jan 28 10:28 EDB_EFM_SETUP

-rw-r--r-- 1 loaner staff 1500 Jan 28 10:28 README.md

-rw-r--r-- 1 loaner staff 19342 Jan 28 10:28 ansible.cfg

-rw-r--r-- 1 loaner staff 1005 Feb 12 11:44 edb_efm_cluster_input.tf

-rw-r--r-- 1 loaner staff 1447 Feb 11 12:27 licence.txt

laptop154-ma-us:EFM_Setup_AWS loaner$

laptop154-ma-us:EFM_Setup_AWS loaner$

Terraform Deploy

Run the following command to start setting up the EFM cluster:

terraform init

laptop154-ma-us:EFM_Setup_AWS loaner$ terraform init

Initializing modules...

Initializing the backend...

Initializing provider plugins...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.null: version = "~> 2.1"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

laptop154-ma-us:EFM_Setup_AWS loaner$

terraform apply

This will prompt you to confirm setting up EFM on the database cluster created in Step 1. Type yes and hit Enter to proceed. Once it finishes, you will see resource-created messages in your terminal.

laptop154-ma-us:EFM_Setup_AWS loaner$

laptop154-ma-us:EFM_Setup_AWS loaner$ terraform apply

module.efm-db-cluster.data.terraform_remote_state.SR: Refreshing state...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.efm-db-cluster.null_resource.master will be created

+ resource "null_resource" "master" {

+ id = (known after apply)

+ triggers = {

+ "path" = "./DB_Cluster_AWS"

}

}

# module.efm-db-cluster.null_resource.removehostfile will be created

+ resource "null_resource" "removehostfile" {

+ id = (known after apply)

}

# module.efm-db-cluster.null_resource.slave1 will be created

+ resource "null_resource" "slave1" {

+ id = (known after apply)

+ triggers = {

+ "path" = "./DB_Cluster_AWS"

}

}

# module.efm-db-cluster.null_resource.slave2 will be created

+ resource "null_resource" "slave2" {

+ id = (known after apply)

+ triggers = {

+ "path" = "./DB_Cluster_AWS"

}

}

Plan: 4 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.efm-db-cluster.null_resource.slave2: Creating...

module.efm-db-cluster.null_resource.master: Creating...

module.efm-db-cluster.null_resource.slave1: Creating...

module.efm-db-cluster.null_resource.slave1: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.slave2: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.master: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.slave2 (local-exec): Executing: ["/bin/sh" "-c" "sleep 30"]

module.efm-db-cluster.null_resource.slave1 (local-exec): Executing: ["/bin/sh" "-c" "sleep 30"]

module.efm-db-cluster.null_resource.master (local-exec): Executing: ["/bin/sh" "-c" "echo '3.233.45.172 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' > EDB_EFM_SETUP/utilities/scripts/hosts"]

module.efm-db-cluster.null_resource.master: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.master (local-exec): Executing: ["/bin/sh" "-c" "echo '3.216.125.55 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' >> EDB_EFM_SETUP/utilities/scripts/hosts"]

module.efm-db-cluster.null_resource.master: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.master (local-exec): Executing: ["/bin/sh" "-c" "echo '35.153.144.7 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' >> EDB_EFM_SETUP/utilities/scripts/hosts"]

module.efm-db-cluster.null_resource.master: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.master (local-exec): Executing: ["/bin/sh" "-c" "sleep 30"]

module.efm-db-cluster.null_resource.master: Still creating... [10s elapsed]

module.efm-db-cluster.null_resource.slave1: Still creating... [10s elapsed]

module.efm-db-cluster.null_resource.slave2: Still creating... [10s elapsed]

module.efm-db-cluster.null_resource.slave1: Still creating... [20s elapsed]

module.efm-db-cluster.null_resource.master: Still creating... [20s elapsed]

module.efm-db-cluster.null_resource.slave2: Still creating... [20s elapsed]

module.efm-db-cluster.null_resource.master: Still creating... [30s elapsed]

module.efm-db-cluster.null_resource.slave1: Still creating... [30s elapsed]

module.efm-db-cluster.null_resource.slave2: Still creating... [30s elapsed]

module.efm-db-cluster.null_resource.slave1: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.master (local-exec): TASK [Copy fencing script] *****************************************************

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172]

module.efm-db-cluster.null_resource.master (local-exec): TASK [Modify fencing script] ***************************************************

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172] => (item={u'To': u'3.233.45.172', u'From': u'eip'})

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172] => (item={u'To': u'us-east-1', u'From': u'region-name'})

module.efm-db-cluster.null_resource.master (local-exec): TASK [Start EFM service] *******************************************************

module.efm-db-cluster.null_resource.master: Still creating... [2m10s elapsed]

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172]

module.efm-db-cluster.null_resource.master (local-exec): TASK [Make service persistance when DB engine is epas] *************************

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172] => (item=efm-3.8)

module.efm-db-cluster.null_resource.master (local-exec): changed: [3.233.45.172] => (item=edb-as-12)

module.efm-db-cluster.null_resource.master (local-exec): TASK [Make service persistance when DB engine is pg] ***************************

module.efm-db-cluster.null_resource.master (local-exec): skipping: [3.233.45.172]

module.efm-db-cluster.null_resource.master (local-exec): PLAY RECAP *********************************************************************

module.efm-db-cluster.null_resource.master (local-exec): 3.233.45.172 : ok=17 changed=16 unreachable=0 failed=0 skipped=9 rescued=0 ignored=0

module.efm-db-cluster.null_resource.master: Creation complete after 2m14s [id=5627097035560517832]

module.efm-db-cluster.null_resource.removehostfile: Creating...

module.efm-db-cluster.null_resource.removehostfile: Provisioning with 'local-exec'...

module.efm-db-cluster.null_resource.removehostfile (local-exec): Executing: ["/bin/sh" "-c" "rm -rf EDB_EFM_SETUP/utilities/scripts/hosts"]

module.efm-db-cluster.null_resource.removehostfile: Creation complete after 0s [id=4754872144954597651]

Apply complete! Resources: 4 added, 0 changed, 0 destroyed.

laptop154-ma-us:EFM_Setup_AWS loaner$

laptop154-ma-us:EFM_Setup_AWS loaner$

laptop154-ma-us:EFM_Setup_AWS loaner$

Check EFM setup for the PostgreSQL cluster

As Terraform outputs the Master and Standby node IPs, you can ssh into any of the nodes (either Master or Standby nodes) and check for the EFM status. I am going to ssh into the Master instance and confirm the EFM setup that was done by Terraform for me.

laptop154-ma-us:Downloads loaner$ ssh -i pgdeploy.pem centos@3.233.45.172

Last login: Wed Mar 4 13:45:56 2020 from c-73-253-67-129.hsd1.ma.comcast.net

[centos@ip-172-31-65-116 ~]$ sudo -i

[root@ip-172-31-65-116 ~]#

[root@ip-172-31-65-116 ~]#

[root@ip-172-31-65-116 ~]# cd /usr/edb/efm-3.8/bin/

[root@ip-172-31-65-116 bin]#

[root@ip-172-31-65-116 bin]#

[root@ip-172-31-65-116 bin]# ls -ll

total 44

-rwxr-xr-x. 1 root root 386 Jan 6 06:23 efm

-rwxr-xr-x. 1 root root 1832 Jan 6 06:23 efm_address

-rwxr-xr-x. 1 root root 20246 Jan 6 06:23 efm_db_functions

-rwxr-xr-x. 1 root root 4630 Jan 6 06:23 efm_root_functions

-rwxr-xr-x. 1 root root 1481 Jan 6 06:23 runefm.sh

-rwxr-xr-x. 1 root root 2514 Jan 6 06:23 runJavaApplication.sh

drwxr-x---. 2 root efm 24 Mar 2 16:11 secure

[root@ip-172-31-65-116 bin]# ./efm cluster-status efm

Cluster Status: efm

Agent Type Address Agent DB VIP

-----------------------------------------------------------------------

Standby 172.31.3.196 UP UP

Master 172.31.65.116 UP UP

Standby 172.31.75.72 UP UP

Allowed node host list:

172.31.65.116 172.31.3.196 172.31.75.72 172.31.11.255

Membership coordinator: 172.31.65.116

Standby priority host list:

172.31.75.72 172.31.3.196

Promote Status:

DB Type Address WAL Received LSN WAL Replayed LSN Info

---------------------------------------------------------------------------

Master 172.31.65.116 0/903A0B0

Standby 172.31.75.72 0/903A0B0 0/903A0B0

Standby 172.31.3.196 0/903A0B0 0/903A0B0

Standby database(s) in sync with master. It is safe to promote.

[root@ip-172-31-65-116 bin]#
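If you would rather check the status from a script than read the table by eye, the following is a minimal sketch. It assumes the cluster-status layout shown above (agent type, address, agent state, DB state) and only looks at Master and Standby rows; the function name `efm_nodes_not_up` is hypothetical and not part of EFM.

```shell
#!/bin/sh
# Read `efm cluster-status` text on stdin and print the address of every
# Master/Standby node whose Agent or DB column is not "UP".
# Assumption: columns are <type> <address> <agent> <db>, as in the output above.
efm_nodes_not_up() {
  awk '
    # Stop before the later sections, which also contain Master/Standby rows.
    /^[[:space:]]*Allowed node host list/ { exit }
    $1 ~ /^(Master|Standby)$/ && NF >= 4 {
      if ($3 != "UP" || $4 != "UP") print $2
    }
  '
}
```

On a database node you could run `./efm cluster-status efm | efm_nodes_not_up`; empty output means every listed node reported UP for both the agent and the database.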


Step 3: The PEM Server is set up for monitoring the database. PEM agents are monitoring the database nodes and sending the data to the PEM server.

PEM Server

The PEM server monitors the database servers. In this section, we walk you through the steps of setting up the PEM server; after that, we set up PEM agents on the database cluster that was created in Step 1.

Go into the PEM_Server_AWS folder, open the file edb_pemserver_input.tf in your preferred editor, fill in the values for the mandatory parameters listed in the file, and save it.

If you need help with any of the parameters, check out the GitHub Wiki page, which describes each parameter in detail. For the optional parameters, you can either supply a customized value or keep the default values included in the input file.

laptop154-ma-us:postgres-deployments loaner$ ls -ll

total 8

drwxr-xr-x 11 loaner staff 352 Mar 2 09:32 DB_Cluster_AWS

drwxr-xr-x 11 loaner staff 352 Mar 2 09:35 DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:05 EFM_Setup_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 EFM_Setup_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:06 Expand_DB_Cluster_AWS

drwxr-xr-x 7 loaner staff 224 Feb 11 12:27 Expand_DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:04 PEM_Agent_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 PEM_Agent_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:04 PEM_Server_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:22 PEM_Server_VMWARE

laptop154-ma-us:postgres-deployments loaner$

laptop154-ma-us:postgres-deployments loaner$

laptop154-ma-us:postgres-deployments loaner$ cd PEM_Server_AWS/

laptop154-ma-us:PEM_Server_AWS loaner$

laptop154-ma-us:PEM_Server_AWS loaner$

laptop154-ma-us:PEM_Server_AWS loaner$ ls -ll

total 96

drwxr-xr-x 7 loaner staff 224 Jan 28 10:28 EDB_PEM_Server

-rw-r--r-- 1 loaner staff 1535 Jan 28 10:28 README.md

-rw-r--r-- 1 loaner staff 19342 Jan 28 10:28 ansible.cfg

-rw-r--r-- 1 loaner staff 1680 Feb 12 12:33 edb_pemserver_input.tf

-rw-r--r-- 1 loaner staff 1447 Feb 11 12:27 licence.txt

-rw-r--r-- 1 loaner staff 158 Feb 27 13:04 terraform.tfstate

-rw-r--r-- 1 loaner staff 9674 Feb 27 13:04 terraform.tfstate.backup

laptop154-ma-us:PEM_Server_AWS loaner$


Deploy

Run the following commands to set up a PEM server that will monitor the database cluster created in Step 1.

terraform init

laptop154-ma-us:PEM_Server_AWS loaner$ terraform init

Initializing modules...

Initializing the backend...

Initializing provider plugins...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.aws: version = "~> 2.48"

* provider.null: version = "~> 2.1"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

laptop154-ma-us:PEM_Server_AWS loaner$

laptop154-ma-us:PEM_Server_AWS loaner$


terraform apply

This command starts creating resources. It first prompts you for the AWS region; provide the region code (for example, us-east-1) and hit Enter. The next prompt asks you to confirm the creation of the resources. Type yes exactly as shown, since only 'yes' is accepted, and hit Enter. Terraform then creates the resources, and Ansible configures the PEM server.
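If you prefer not to type the region at the prompt, Terraform also reads input variables from environment variables named `TF_VAR_<name>`. The sketch below assumes the variable is called `region_name`, as the `var.region_name` prompt in the run suggests; the helper name `use_region` is hypothetical.

```shell
#!/bin/sh
# Export the region so that `terraform apply` does not prompt for it.
# Assumption: the input variable is named region_name (taken from the prompt).
use_region() {
  TF_VAR_region_name="$1"
  export TF_VAR_region_name
}
```

For example, `use_region us-east-1 && terraform apply` skips the region prompt; Terraform still asks for the yes confirmation before it creates anything.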

Once this is complete you will see the PEM server IP as an output displayed on your screen:


laptop154-ma-us:PEM_Server_AWS loaner$ terraform apply

var.region_name

AWS Region Code like us-east-1,us-west-1

Enter a value: us-east-1

module.edb-pem-server.data.aws_ami.centos_ami: Refreshing state...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.edb-pem-server.aws_instance.EDB_Pem_Server will be created

+ resource "aws_instance" "EDB_Pem_Server" {

+ ami = "ami-0c3b960f8440c7d71"

+ arn = (known after apply)

+ associate_public_ip_address = (known after apply)

+ availability_zone = (known after apply)

+ cpu_core_count = (known after apply)

+ cpu_threads_per_core = (known after apply)

+ get_password_data = false

+ host_id = (known after apply)

+ id = (known after apply)

+ instance_state = (known after apply)

+ instance_type = "t2.micro"

+ ipv6_address_count = (known after apply)

+ ipv6_addresses = (known after apply)

+ key_name = "pgdeploy"

+ network_interface_id = (known after apply)

+ password_data = (known after apply)

+ placement_group = (known after apply)

+ primary_network_interface_id = (known after apply)

+ private_dns = (known after apply)

+ private_ip = (known after apply)

+ public_dns = (known after apply)

+ public_ip = (known after apply)

+ security_groups = (known after apply)

+ source_dest_check = true

+ subnet_id = "subnet-e0621bef"

+ tags = {

+ "Created_By" = "Terraform"

+ "Name" = "edb-pem-server"

}

+ tenancy = (known after apply)

+ volume_tags = (known after apply)

+ vpc_security_group_ids = (known after apply)

+ ebs_block_device {

+ delete_on_termination = (known after apply)

+ device_name = (known after apply)

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ snapshot_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = (known after apply)

+ volume_type = (known after apply)

}

+ ephemeral_block_device {

+ device_name = (known after apply)

+ no_device = (known after apply)

+ virtual_name = (known after apply)

}

+ network_interface {

+ delete_on_termination = (known after apply)

+ device_index = (known after apply)

+ network_interface_id = (known after apply)

}

+ root_block_device {

+ delete_on_termination = true

+ encrypted = (known after apply)

+ iops = (known after apply)

+ kms_key_id = (known after apply)

+ volume_id = (known after apply)

+ volume_size = 8

+ volume_type = "gp2"

}

}

# module.edb-pem-server.aws_security_group.edb_pem_sg[0] will be created

+ resource "aws_security_group" "edb_pem_sg" {

+ arn = (known after apply)

+ description = "Rule for edb-terraform-resource"

+ egress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 0

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "-1"

+ security_groups = []

+ self = false

+ to_port = 0

},

]

+ id = (known after apply)

+ ingress = [

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 22

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 22

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 5432

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 5432

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 5444

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 5444

},

+ {

+ cidr_blocks = [

+ "0.0.0.0/0",

]

+ description = ""

+ from_port = 8443

+ ipv6_cidr_blocks = []

+ prefix_list_ids = []

+ protocol = "tcp"

+ security_groups = []

+ self = false

+ to_port = 8443

},

]

+ name = "edb_pemsecurity_group"

+ owner_id = (known after apply)

+ revoke_rules_on_delete = false

+ vpc_id = "vpc-de8457a4"

}

# module.edb-pem-server.null_resource.removehostfile will be created

+ resource "null_resource" "removehostfile" {

+ id = (known after apply)

}

Plan: 3 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.edb-pem-server.aws_security_group.edb_pem_sg[0]: Creating...

module.edb-pem-server.aws_security_group.edb_pem_sg[0]: Creation complete after 2s [id=sg-0ddb1eb11366a6801]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Creating...

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [10s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [20s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [30s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Provisioning with 'local-exec'...

module.edb-pem-server.aws_instance.EDB_Pem_Server (local-exec): Executing: ["/bin/sh" "-c" "echo '3.227.217.117 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' > EDB_PEM_Server/utilities/scripts/hosts"]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Provisioning with 'local-exec'...

module.edb-pem-server.aws_instance.EDB_Pem_Server (local-exec): Executing: ["/bin/sh" "-c" "sleep 60"]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [40s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [50s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [1m0s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server: Still creating... [1m10s elapsed]

module.edb-pem-server.aws_instance.EDB_Pem_Server (local-exec): PLAY RECAP *********************************************************************

module.edb-pem-server.aws_instance.EDB_Pem_Server (local-exec): 3.227.217.117 : ok=13 changed=12 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

module.edb-pem-server.aws_instance.EDB_Pem_Server: Creation complete after 5m19s [id=i-07ff864f6b357f752]

module.edb-pem-server.null_resource.removehostfile: Creating...

module.edb-pem-server.null_resource.removehostfile: Provisioning with 'local-exec'...

module.edb-pem-server.null_resource.removehostfile (local-exec): Executing: ["/bin/sh" "-c" "rm -rf EDB_PEM_Server/utilities/scripts/hosts"]

module.edb-pem-server.null_resource.removehostfile: Creation complete after 0s [id=1836518445024565325]

Apply complete! Resources: 3 added, 0 changed, 0 destroyed.

Outputs:

PEM_SERVER_IP = 3.227.217.117

laptop154-ma-us:PEM_Server_AWS loaner$


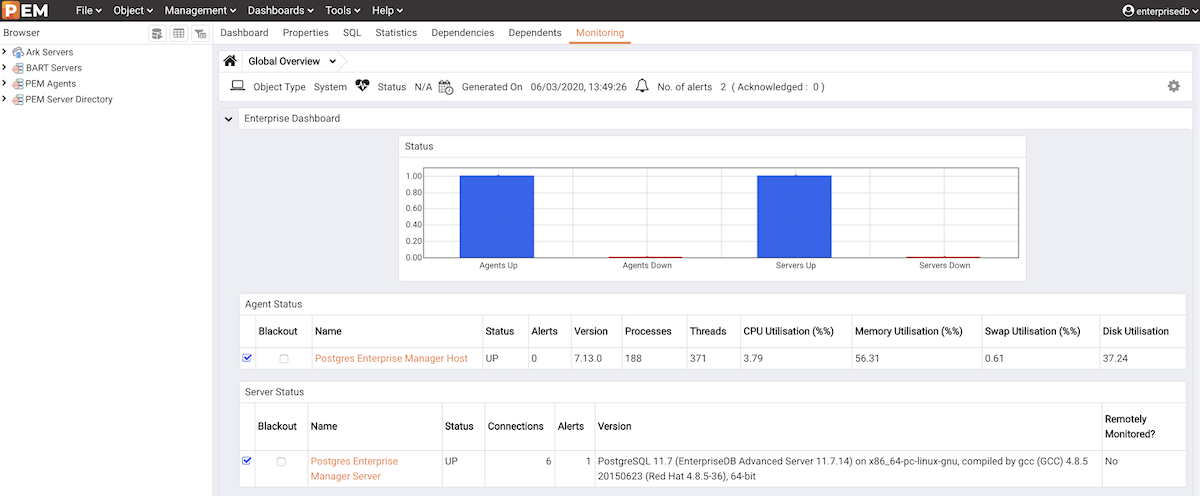

Access the PEM server

Open a browser, enter the PEM URL (https://<ip_address_of_PEM_host>:8443/pem), and hit Enter. In my case, I used the PEM server IP that Terraform printed above: 3.227.217.117. I kept the default username, enterprisedb; the password is the DB password you entered in the db_password field.
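Before opening the browser, you can confirm from the terminal that the PEM web interface is answering. This is a rough sketch: the helper names `pem_url` and `pem_http_status` are hypothetical, and the `-k` flag assumes the server presents a certificate that your machine does not trust yet.

```shell
#!/bin/sh
# Build the PEM web URL from the server IP (port and path as given above).
pem_url() {
  printf 'https://%s:8443/pem\n' "$1"
}

# Print the HTTP status code returned by the PEM URL.
# -k skips certificate verification (assumption: untrusted certificate).
pem_http_status() {
  curl -k -s -o /dev/null -w '%{http_code}' "$(pem_url "$1")"
}
```

With the IP from the run above, `pem_url 3.227.217.117` prints the address to paste into the browser, and `pem_http_status 3.227.217.117` prints the status code the server returns.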

Register PEM Agent with PEM server

To start monitoring the DB servers created earlier, we need to register a PEM agent (pemagent) on each of them with the PEM server. Here we explain how to register a PEM agent with a PEM server.

Prerequisites:

1. DB cluster created using EDB deployment scripts.

2. PEM server created using EDB deployment scripts.
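A quick way to check both prerequisites is to look for the Terraform state files that the earlier steps leave behind. This is only a rough sketch: it assumes each step keeps a local terraform.tfstate in its own folder, as the directory listings in this walkthrough show, and the function name `have_prereq_state` is hypothetical.

```shell
#!/bin/sh
# Return 0 when the DB cluster and PEM server folders both contain a
# non-empty terraform.tfstate, else report the missing file and return 1.
# $1 is the root of the cloned repository (defaults to the current folder).
have_prereq_state() {
  root="${1:-.}"
  for dir in DB_Cluster_AWS PEM_Server_AWS; do
    if [ ! -s "$root/$dir/terraform.tfstate" ]; then
      echo "missing state: $root/$dir/terraform.tfstate" >&2
      return 1
    fi
  done
  return 0
}
```

A non-empty state file does not prove the resources still exist, so treat a passing check as a hint rather than a guarantee.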

Go into the PEM_Agent_AWS folder, open the file edb_pemagent_input.tf in your preferred editor, fill in the values for the mandatory parameters listed in the file, and save it.

If you need help with any of the parameters, check out the GitHub Wiki page, which describes each parameter in detail. For the optional parameters, you can either supply a customized value or keep the default values included in the input file.

laptop154-ma-us:postgres-deployments loaner$ ls -ll

total 8

drwxr-xr-x 10 loaner staff 320 Mar 2 09:43 DB_Cluster_AWS

drwxr-xr-x 10 loaner staff 320 Mar 2 09:44 DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:05 EFM_Setup_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 EFM_Setup_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:06 Expand_DB_Cluster_AWS

drwxr-xr-x 7 loaner staff 224 Feb 11 12:27 Expand_DB_Cluster_VMWARE

drwxr-xr-x 10 loaner staff 320 Feb 27 13:04 PEM_Agent_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:21 PEM_Agent_VMWARE

drwxr-xr-x 10 loaner staff 320 Mar 2 09:51 PEM_Server_AWS

drwxr-xr-x 10 loaner staff 320 Feb 27 14:22 PEM_Server_VMWARE

-rw-r--r-- 1 loaner staff 157 Feb 12 11:11 terraform.tfstate

laptop154-ma-us:postgres-deployments loaner$

laptop154-ma-us:postgres-deployments loaner$

laptop154-ma-us:postgres-deployments loaner$ cd PEM_Agent_AWS/

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$ ls -ll

total 80

drwxr-xr-x 7 loaner staff 224 Jan 28 10:28 EDB_PEM_AGENT

-rw-r--r-- 1 loaner staff 1544 Jan 28 10:28 README.md

-rw-r--r-- 1 loaner staff 19342 Jan 28 10:28 ansible.cfg

-rw-r--r-- 1 loaner staff 991 Feb 12 12:46 edb_pemagent_input.tf

-rw-r--r-- 1 loaner staff 1447 Feb 11 12:27 licence.txt

-rw-r--r-- 1 loaner staff 158 Feb 27 13:04 terraform.tfstate

-rw-r--r-- 1 loaner staff 3977 Feb 27 13:04 terraform.tfstate.backup

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$


Deploy

Run the command below to start the process of registering a PEM agent with the PEM server:

terraform init

laptop154-ma-us:postgres-deployments loaner$ cd PEM_Agent_AWS/

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$ ls -ll

total 80

drwxr-xr-x 7 loaner staff 224 Jan 28 10:28 EDB_PEM_AGENT

-rw-r--r-- 1 loaner staff 1544 Jan 28 10:28 README.md

-rw-r--r-- 1 loaner staff 19342 Jan 28 10:28 ansible.cfg

-rw-r--r-- 1 loaner staff 991 Feb 12 12:46 edb_pemagent_input.tf

-rw-r--r-- 1 loaner staff 1447 Feb 11 12:27 licence.txt

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$ terraform init

Initializing modules...

Initializing the backend...

Initializing provider plugins...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.null: version = "~> 2.1"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$


terraform apply

This command prompts you for confirmation. Type yes exactly as shown, since only 'yes' is accepted, and hit Enter. Terraform then registers a PEM agent on each database node with the PEM server, and when it completes you will see an "Apply complete!" message listing the resources added.

laptop154-ma-us:PEM_Agent_AWS loaner$

laptop154-ma-us:PEM_Agent_AWS loaner$ terraform apply

module.edb-pem-agent.data.terraform_remote_state.PEM_SERVER: Refreshing state...

module.edb-pem-agent.data.terraform_remote_state.DB_CLUSTER: Refreshing state...

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.edb-pem-agent.null_resource.configurepemagent will be created

+ resource "null_resource" "configurepemagent" {

+ id = (known after apply)

+ triggers = {

+ "path" = "./PEM_Server_AWS"

}

}

# module.edb-pem-agent.null_resource.removehostfile will be created

+ resource "null_resource" "removehostfile" {

+ id = (known after apply)

}

Plan: 2 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.edb-pem-agent.null_resource.configurepemagent: Creating...

module.edb-pem-agent.null_resource.configurepemagent: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.configurepemagent (local-exec): Executing: ["/bin/sh" "-c" "echo '3.233.45.172 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' > EDB_PEM_AGENT/utilities/scripts/hosts"]

module.edb-pem-agent.null_resource.configurepemagent: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.configurepemagent (local-exec): Executing: ["/bin/sh" "-c" "echo '3.216.125.55 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' >> EDB_PEM_AGENT/utilities/scripts/hosts"]

module.edb-pem-agent.null_resource.configurepemagent: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.configurepemagent (local-exec): Executing: ["/bin/sh" "-c" "echo '35.153.144.7 ansible_ssh_private_key_file=/Users/loaner/Downloads/pgdeploy.pem' >> EDB_PEM_AGENT/utilities/scripts/hosts"]

module.edb-pem-agent.null_resource.configurepemagent: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.configurepemagent (local-exec): Executing: ["/bin/sh" "-c" "sleep 30"]

module.edb-pem-agent.null_resource.configurepemagent: Still creating... [10s elapsed]

module.edb-pem-agent.null_resource.configurepemagent: Still creating... [20s elapsed]

module.edb-pem-agent.null_resource.configurepemagent: Still creating... [30s elapsed]

module.edb-pem-agent.null_resource.configurepemagent: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.configurepemagent (local-exec): TASK [Register pem agent] ******************************************************

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.233.45.172]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [35.153.144.7]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.216.125.55]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): TASK [Register agent on pemserver UI] ******************************************

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [35.153.144.7]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.216.125.55]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.233.45.172]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): TASK [Register agent on pemserver UI] ******************************************

module.edb-pem-agent.null_resource.configurepemagent (local-exec): skipping: [3.233.45.172]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): skipping: [3.216.125.55]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): skipping: [35.153.144.7]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): TASK [Restart pem agent] *******************************************************

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.233.45.172]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [3.216.125.55]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): changed: [35.153.144.7]

module.edb-pem-agent.null_resource.configurepemagent (local-exec): PLAY RECAP *********************************************************************

module.edb-pem-agent.null_resource.configurepemagent (local-exec): 3.216.125.55 : ok=10 changed=9 unreachable=0 failed=0 skipped=7 rescued=0 ignored=0

module.edb-pem-agent.null_resource.configurepemagent (local-exec): 3.233.45.172 : ok=10 changed=9 unreachable=0 failed=0 skipped=7 rescued=0 ignored=0

module.edb-pem-agent.null_resource.configurepemagent (local-exec): 35.153.144.7 : ok=10 changed=9 unreachable=0 failed=0 skipped=7 rescued=0 ignored=0

module.edb-pem-agent.null_resource.configurepemagent: Creation complete after 56s [id=1284715281255921362]

module.edb-pem-agent.null_resource.removehostfile: Creating...

module.edb-pem-agent.null_resource.removehostfile: Provisioning with 'local-exec'...

module.edb-pem-agent.null_resource.removehostfile (local-exec): Executing: ["/bin/sh" "-c" "rm -rf EDB_PEM_AGENT/utilities/scripts/hosts"]

module.edb-pem-agent.null_resource.removehostfile: Creation complete after 0s [id=6068360084165743452]

Apply complete! Resources: 2 added, 0 changed, 0 destroyed.

laptop154-ma-us:PEM_Agent_AWS
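The PLAY RECAP lines are the quickest signal that the Ansible run succeeded on every node. As a rough sketch, assuming you saved the apply output to a file and that the recap lines keep the format shown above, a small filter can list any host with a non-zero failed or unreachable count. The function name `recap_failures` is hypothetical.

```shell
#!/bin/sh
# Read Terraform/Ansible output on stdin and print each host whose
# PLAY RECAP line reports unreachable > 0 or failed > 0.
recap_failures() {
  awk '
    /unreachable=/ && /failed=/ {
      host = ""; bad = 0
      for (i = 1; i <= NF; i++) {
        # The host is the field just before the standalone ":" separator.
        if ($i == ":" && i > 1) host = $(i - 1)
        if ($i ~ /^(unreachable|failed)=/) {
          split($i, kv, "=")
          if (kv[2] + 0 > 0) bad = 1
        }
      }
      if (bad) print host
    }
  '
}
```

One way to use it is `terraform apply -no-color 2>&1 | tee apply.log` followed by `recap_failures < apply.log`; empty output means no host reported a failure.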


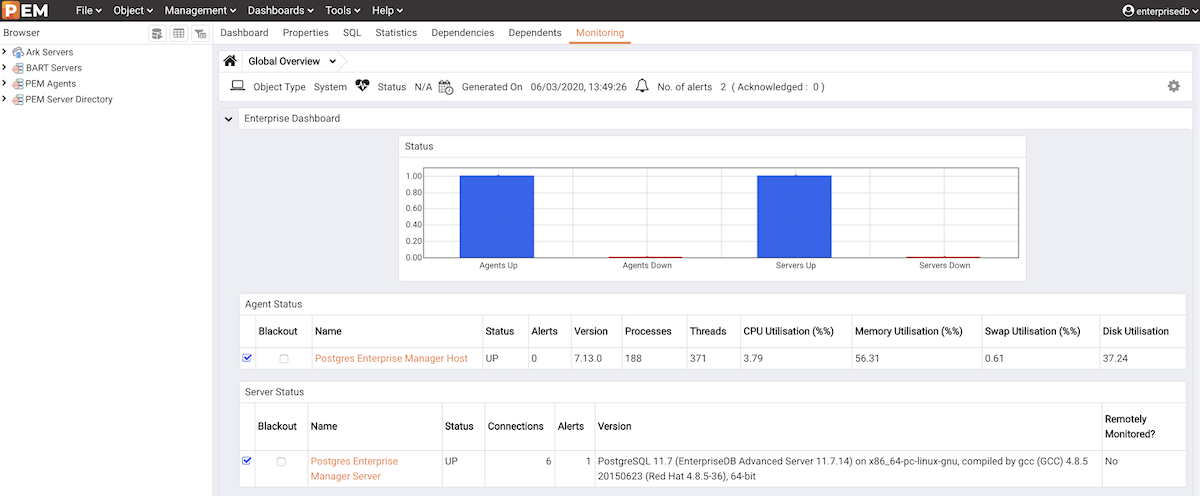

Confirm the PEM agent setup on the PEM server

Open a browser, enter the PEM URL (https://<ip_address_of_PEM_host>:8443/pem), and hit Enter. In my case, I used the PEM server IP that Terraform printed above: 3.227.217.117. I kept the default username, enterprisedb; the password is the DB password you entered in the db_password field.

Resource Cleanup

You can clean up the resources at any time with Terraform’s destroy command, which destroys the Terraform-managed infrastructure.

terraform destroy

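This should clean up all the resources that Terraform created from the folder you run it in. Each deployment folder keeps its own state, so run the command in every folder where you ran terraform apply.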
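Since each step in this walkthrough was applied from its own folder, cleanup can be scripted as a loop over those folders. The sketch below is an assumption-laden example, not part of the deployment scripts: it tears down in the reverse of the order used here (PEM agent, PEM server, EFM, DB cluster), and the function names `teardown_order` and `destroy_all` are hypothetical.

```shell
#!/bin/sh
# Folders from this walkthrough, listed in reverse order of creation.
# Assumption: reverse order is the safe teardown order.
teardown_order() {
  printf '%s\n' PEM_Agent_AWS PEM_Server_AWS EFM_Setup_AWS DB_Cluster_AWS
}

# Run `terraform destroy` in each folder under the repository root ($1).
# Terraform still prompts for its inputs and confirmation in every folder.
destroy_all() {
  root="${1:-.}"
  for dir in $(teardown_order); do
    [ -d "$root/$dir" ] || continue
    echo "Destroying resources tracked in $dir"
    (cd "$root/$dir" && terraform destroy) || return 1
  done
}
```

Running `destroy_all .` from the root of the cloned repository walks through the four folders and stops at the first failure, so you can fix the problem and rerun.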