High availability is one of the key drivers of Postgres adoption. However, taking full advantage of its benefits requires ensuring that your Postgres database can safely and efficiently failover in case of an outage.

Enterprise Failover Manager (EFM) is a tool for managing Postgres database clusters, enabling high availability of primary-standby deployment architectures using streaming replication. Failover Manager provides a Postgres primary database node automatic failover to a standby database node in the event of a software or hardware failure.

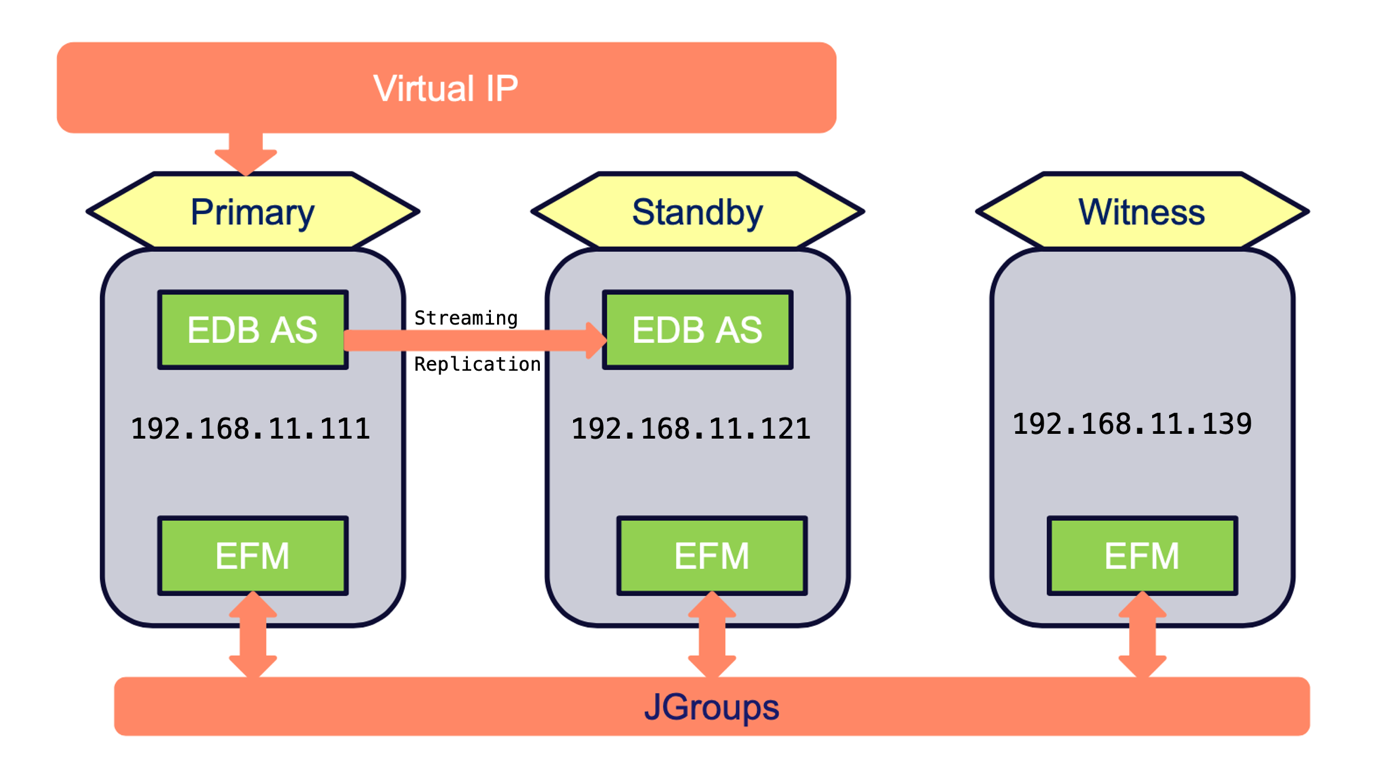

In this document, we considered one primary, one standby and a witness node in the EFM cluster as per below architecture. In case there are more than one standby databases we may skip witness node.

Environment Details

| OS Version | CentOS |

|---|---|

| Java | 1.8 |

| PostgreSQL | 14.4 |

| EFM | 4.4 |

| Primary Server | 192.168.11.111 |

| Standby Server | 192.168.11.121 |

| Witness Server | 192.168.11.139 |

Below are the few prerequisites for deployment of EDB's Enterprise Failover Manager (EFM) for achieving high availability,

- Java environment to be setup on each server

- SMTP server to be running/port 25 to be opened for all servers to send mail notification in case of any change in setup, failure of node or failover.

- Allotment of Virtual IP(VIP) for making connection between Application and DB Server

- All the servers in EFM cluster must be in the same segment/VLAN

- Witness node of EFM for checking the heartbeat of DB servers/other EFM services and to avoid split brain syndrome. (optional if having two or more standby)

- EFM binaries to be installed on all database servers

- Setting up of Streaming Replication (between primary and standby)

- Entry to be made of all servers which are part of EFM cluster in pg_hba.conf file of each database server

In addition to the above architecture, there are other EDB supported architectures including pgPool/Pgbouncer for connection pooling and load balancing in on premise or cloud environments. Please refer to our documentation.

Setting up streaming replication

As per the given architecture, we need to setup streaming replication between 192.168.11.111 and 192.168.11.121. We may use pg_basebackup to set up streaming replication. Below command to be executed from the standby server.

bash-4.4$ /usr/pgsql-14/bin/pg_basebackup -D /var/lib/pgsql/14/data/ -h 192.168.11.121 -p 5432 -Xs -R -P

Where -D = data directory at standby server

-h = IP address of primary server

-p = Port on which primary instance is running

-Xs = WAL method - stream

-P = Progress information

-R = Write configuration parameters for replication

Please refer to this article for more details for setting up streaming replication.

After setting up streaming replication, we may use pg_stat_replication on primary database instance to verify the replication status.

postgres=# select * from pg_stat_replication;

-[ RECORD 1 ]----+---------------------------------

pid | 53474

usesysid | 10

usename | postgres

application_name | walreceiver

client_addr | 192.168.11.121

client_hostname |

client_port | 40118

backend_start | 2023-01-17 13:59:15.855432+05:30

backend_xmin |

state | streaming

sent_lsn | 0/ED000D00

write_lsn | 0/ED000D00

flush_lsn | 0/ED000D00

replay_lsn | 0/ED000D00

write_lag |

flush_lag |

replay_lag |

sync_priority | 0

sync_state | async

reply_time | 2023-01-17 14:08:11.229453+05:30

EFM installation and configuration

[root@lab1 ~]# yum install java edb-efm44

[root@lab1 ~]# chown efm:efm -R /etc/edb/efm-4.4

[root@lab1 ~]# echo 'export PATH=/usr/edb/efm-4.4/bin:$PATH' >> .bash_profile

[root@lab1 efm-4.4]# source ~/.bash_profile

[root@lab1 ~]# cd /etc/edb/efm-4.4/

[root@lab1 ~]# cp efm.properties.in efm.properties

[root@lab1 ~]# cp efm.nodes.in efm.nodes

[root@lab1 efm-4.4]# ll

total 64

-rwxrwxrwx. 1 efm efm 199 Dec 12 16:23 efm.nodes

-rw-r--r--. 1 efm efm 139 Mar 19 2021 efm.nodes.in

-rw-r--r--. 1 efm efm 28145 Dec 12 16:21 efm.properties

-rw-r--r--. 1 efm efm 27859 Mar 19 2021 efm.properties.in

Generate the encrypted EFM password

[root@lab1 efm-4.4]# efm encrypt efm

This utility will generate an encrypted password for you to place in your

EFM cluster property file: /etc/edb/efm-4.4/efm.properties

Please enter the password and hit enter:

Please enter the password again to confirm:

The encrypted password is: d6cad98d315dfbda601139d2c1b49068

Please paste this into your efm.properties file

db.password.encrypted=d6cad98d315dfbda601139d2c1b49068

Below are the most common parameters which need to be defined in the efm.properties file based on your environment. efm.properties file should be identical across all database servers except the parameter bind.address which should be specific to the server.

db.user=postgres

db.password.encrypted=d6cad98d315dfbda601139d2c1b49068

db.port=5432

db.database=postgres

db.service.owner=postgres

db.service.name=postgresql-14.service

db.bin=/usr/pgsql-14/bin

db.data.dir=/var/lib/pgsql/14/data

user.email=manish.yadav@enterprisedb.com

from.email=efm-info@enterprisedb.com

notification.level=INFO

bind.address=192.168.11.111:7800

admin.port=7800

is.witness=false

ping.server.ip=192.168.11.1

ping.server.command=/bin/ping -q -c3 -w5

auto.allow.hosts=true

stable.nodes.file=true

virtual.ip=192.168.11.151

virtual.ip.interface=ens33

virtual.ip.prefix=255.255.255.0

For the Witness server, we may skip database related(db.*) parameters and need to enable the below parameter:

is.witness=trueWe need to make changes in the efm.nodes file by including IP addresses and port of all servers which are part of the EFM cluster. Below is the sample file.

[root@lab1 efm-4.4]# cat efm.nodes

# List of node address:port combinations separated by whitespace.

# The list should include at least the membership coordinator's address.

192.168.11.121:7800 192.168.11.111:7800 192.168.11.139:7800

Start EFM services Once we are done with streaming replication and defining configuration at EFM level, we are good to start the EFM services across all EFM cluster nodes preferably starting with a primary database instance.

[root@lab1 ~]# systemctl start edb-efm-4.4.service && systemctl enable edb-efm-4.4.service

Verify EFM cluster status

[root@lab1 ~]# efm cluster-status efm

Cluster Status: efm

Agent Type Address DB VIP

----------------------------------------------------------------

Primary 192.168.11.111 UP 192.168.11.151*

Standby 192.168.11.121 UP 192.168.11.151

Witness 192.168.11.139 N/A 192.168.11.151

Allowed node host list:

192.168.11.139 192.168.11.121 192.168.11.111

Membership coordinator: 192.168.11.111

Standby priority host list:

192.168.11.121

Promote Status:

DB Type Address WAL Received LSN WAL Replayed LSN Info

-------------------------------------------------------------------------

Primary 192.168.11.111 0/ED0001B8

Standby 192.168.11.121 0/ED0001B8 0/ED0001B8

Standby database(s) in sync with primary. It is safe to promote.

Performing Switchover

There is no manual intervention required while performing switchover, role reversal between primary and latest standby will take place and remaining standbys will be automatically configured to point to the primary server.

[root@lab1 ~]# efm promote efm -switchover

[root@lab1 ~]# efm cluster-status efm

Cluster Status: efm

Agent Type Address DB VIP

----------------------------------------------------------------

Standby 192.168.11.111 UP 192.168.11.151

Primary 192.168.11.121 UP 192.168.11.151*

Witness 192.168.11.139 N/A 192.168.11.151

Allowed node host list:

192.168.11.139 192.168.11.121 192.168.11.111

Membership coordinator: 192.168.11.111

Standby priority host list:

192.168.11.111

Promote Status:

DB Type Address WAL Received LSN WAL Replayed LSN Info

-------------------------------------------------------------------------

Primary 192.168.11.121 0/EC000D00

Standby 192.168.11.111 0/EC000D00 0/EC000D00

Standby database(s) in sync with primary. It is safe to promote.

Failover scenarios

EFM will perform the failover and promote the latest standby database if the primary database is not available for any reason like server crash, network break, etc. In such a case, old primary needs to be restored back in the EFM cluster manually either using pg_rewind or pg_basebackup utilities.

Add Node to the EFM cluster: To add a new node to the cluster, execute below commands from any of the existing node.

[root@lab1 ~]# efm allow-node efm <ip addr of new machine>Resume monitoring previously stopped database:

[root@lab1 ~]# efm resume efm

Steps to start/stop EFM cluster

In case we are required to stop EFM services across all nodes for maintenance purposes, it is suggested to use stop-cluster command which would stop EFM services on all nodes.

[root@lab1 ~]# efm stop-cluster efmAlternatively to start/stop PostgreSQL/EFM services, there is need to follow the below steps in sequential order.

Steps to stop PostgreSQL & EFM services

- On Standby Node

- Stop EFM Services

- Stop PostgreSQL Service

- On Primary Node

- Stop EFM Services

- Stop PostgreSQL Service

Steps to start PostgreSQL & EFM services

- On Primary Node

- Start EFM Services

- Start PostgreSQL Service

- On Standby Node

- Start EFM Services

- Start PostgreSQL Service

EDB shared supported scenarios for failover by EFM on our site.

It is recommended to test the failover scenarios in a dev environment prior to Go-Live.

This document is a general guideline and there might be changes required in steps based on the deployment environment.

Achieve consistent high availability with EFM

You deserve a database that’s Always On. Thanks to EFM, you can achieve that. Keep your applications running, your teams productive and your customers happy with high availability everyone can trust.