TOAST stands for The Oversized-Attribute Storage Technique.

EDB Postgres and PostgreSQL use a fixed page size (commonly 8 KB), and does not allow tuples to span multiple pages. Therefore, it is not possible to store very large field values directly.

When a row is attempted to be stored that exceeds this size, TOAST basically breaks up the data of large columns into smaller "pieces" and stores them into a TOAST table.

Almost every table you create has its own associated (unique) TOAST table, which may or may not ever end up being used, depending on the size of rows you insert. A table with only fixed-width columns like integers may not have an associated toast table.

All of this is transparent to the user and enabled by default.

The mechanism is accomplished by splitting up the large column entry into approximately 2 KB bytes and storing them as chunks in the TOAST tables. It then stores the length and a pointer to the TOAST entry back where the column is normally stored. Because of how the pointer system is implemented, most TOASTable column types are limited to a max size of approximately 1GB.

TOAST has a number of advantages compared to a more straightforward approach such as allowing row values to span pages. The big values of TOASTed attributes will only be pulled out

(if selected at all) at the time the result set is sent to the client.

For example, in a query like SELECT * FROM a, b WHERE a.big = b.big and a.x = 1 and b.y = 1, scans of the individual tables can be performed without detoasting the big fields, however the join will be performed with detoasting. The table itself will be much smaller and more of its rows fit in the shared buffer cache than would be the case without any out-of-line storage (TOAST). It's also more likely that the sort sets get smaller which imply having sorts being done entirely in memory.

There is more to be said about TOAST like that it also provides compression. TOAST makes it

transparent to the user but keep in mind that TOAST-able column has a limit 1GB.

Large Objects using BYTEA/TEXT (TOAST)

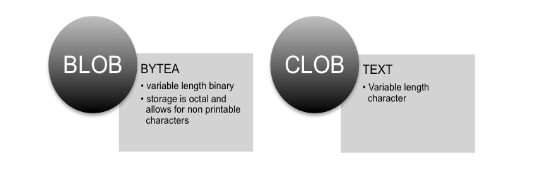

In Postgres, the simplest representation of how LOBs are handled is shown below, where BLOBs are equivalent to the BYTEA data type and CLOBs are equivalent to the TEXT data type:

Since EDB Postgres supports toasted variable length fields such as varchar, bytea, text, all of those fields are considered eligible for “toasting”.

With the use of “toasting” the large object in EDB Postgres becomes a snap and are handled under the covers.

Large Objects using BLOB/CLOB

Large Objects can also be handled in a more conventional manner using data types CLOB and BLOB. In Postgres, these data types are stored in a single system table called 'pg_largeobject' which has to be accessed via identifiers of data type OID which are stored with the table using BLOB/CLOB data.

The catalog pg_largeobject holds the data making up “large objects”. A large object is identified by an OID assigned when it is created. Each large object is broken into segments or “pages” small enough to be conveniently stored as rows in pg_largeobject.

pg_largeobject

|

Name |

Type |

References |

Description |

|

loid |

oid |

Identifier of the large object that includes this page |

|

|

pageno |

int4 |

Page number of this page within its large object |

|

|

data |

bytea |

Actual data stored in the large object. |

All large object manipulation using these functions must take place within an SQL transaction block since large object file descriptors are only valid for the duration of a transaction.

Manipulating data with BLOBs and CLOBs can be accomplished with SQL functions, JDBC , ODBC, OCI , .NET and/or direct access to the Large Object API using libpq. Here are the available SQL functions for CLOB/BLOB manipulation:

- lo_creat()

- lo_create()

- lo_unlink()

- lo_import()

- lo_export()

Large Objects limits in Postgres

- No more than 32TB of large objects can be stored (reason: they are all stored in a single table named pg_largeobject, and the per-table size limit is 32TB (assuming default page size).

- No more than 2^32 large objects can be stored (reason: the primary key of pg_largeobject is an oid which is a 32-bit quantity).

- pg_largeobject cannot be partitioned (reason: because it's a system catalog)

- A large object cannot exceed 4TB for PostgreSQL 9.3 or newer or 2GB for older versions.

IMPORTANT NOTES:

- Since PostgreSQL considers a LO entry as an object, deleting or updating rows in the user table does not delete entries in pg_largeobjects. pg_largeobjects therefore grows infinitely unless a separate purging process is made.

- To prevent this, typically a trigger needs to be added which deletes entries in pg_largeobjects

- Since large objects are created independently from the table columns that reference the objects (unlike TOASTed objects), when you delete a row from the table that points to a large object, the large object is not deleted. Therefore, the management of those deleted objects should be built into your design (a trigger is one option).

Summary of Large Objects using BYTEA/TEXT vs. BLOB/CLOB

Now that we have a basic understanding of how large objects can be handled in Postgres, here is a brief but important summary of the different mechanisms for Large Objects (below):

|

BLOB/CLOB |

BYTEA/TEXT |

|

|

“TOAST”-able? |

||

|

Large Object API NOT required |

||

|

Transaction NOT required |

||

|

Can stream, and seek over entries |

||

|

Need to track OID. |

||

|

Object Storage Limit |

*4 GB |

1 GB |

*limited 4TB (PostgreSQL 9.3+) per entry, & 4 Billion per database

Side by Side Source code comparison using BYTEA vs. BLOB (using EDB JDBC Driver)

|

BYTEA |

BLOB |

|

CREATE TABLE images (imgname text, img bytea); |

CREATE TABLE imageslo (imgname text, imgoid oid); |

|

/* write the image */ File file = new File("myimage.gif"); /* Read the image */ PreparedStatement ps = conn.prepareStatement("SELECT img FROM images WHERE imgname = ?"); |

/* write the image */ // All LargeObject API calls must be within a transaction block |